Some neat little bug-fixes, just in time for Christmas:

Quite a few goodies in this release:

Three exciting changes this time around:

Some neat refinements this time around:

Just a quick bug-fix release to roll back two of the Interceptor/Relocator arm64 changes that went into the previous release. It turns out that these need some more refinement before they can land.

Since our last release, @hsorbo and I have had a lot of fun pair-programming on a wide range of exciting tech. Let’s dive right in.

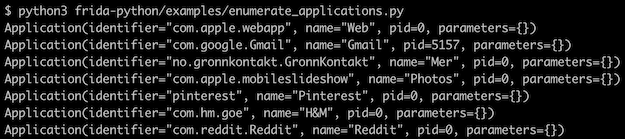

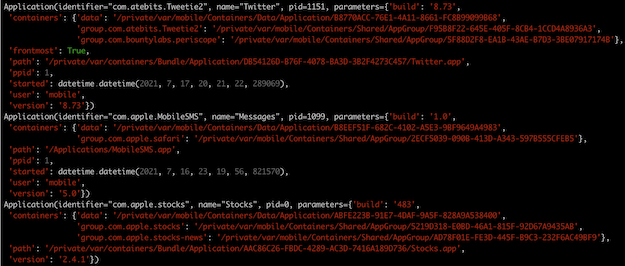

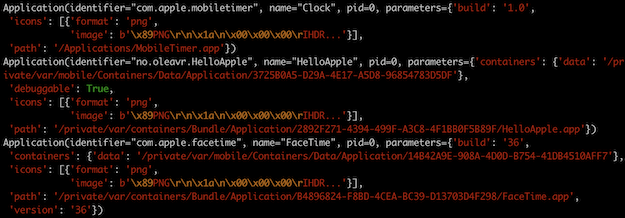

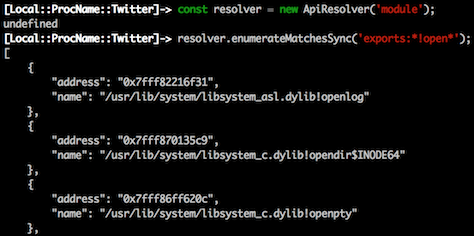

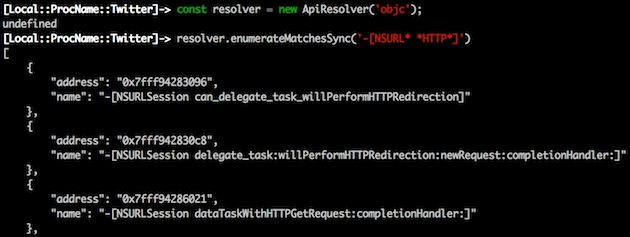

We’ve introduced a brand new ApiResolver for Swift, which you can use like this:

```javascript
const r = new ApiResolver('swift');

r.enumerateMatches('functions:*CoreDevice!*RemoteDevice*')
    .forEach(({ name, address }) => {
      console.log('Found:', name, 'at:', address);
    });
```

There’s also a new and exciting frida-tools release, 12.3.0, which upgrades frida-trace with Swift tracing support, using the new ApiResolver:

```
$ frida-trace Xcode -y '*CoreDevice!*RemoteDevice*'
```

Our Module API now also provides enumerateSections() and enumerateDependencies(). And for when you want to scan loaded modules for specific section names, our existing module ApiResolver now lets you do this with ease:

```javascript
const r = new ApiResolver('module');

r.enumerateMatches('sections:*!*text*/i')
    .forEach(({ name, address }) => {
      console.log('Found:', name, 'at:', address);
    });
```

There’s also a bunch of other exciting changes, so definitely check out the changelog below.
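Both queries above follow the same shape: a kind before the colon, then a module glob and a name glob separated by "!", plus, in the module resolver example, a trailing "/i" for case-insensitive matching. As a rough illustration of how such a query maps onto name matching, here is a plain-JavaScript sketch. parseQuery(), globToRegExp() and matches() are hypothetical helpers, not part of Frida's API, and the real resolvers do this work natively.

```javascript
// Hypothetical sketch of the "<kind>:<module glob>!<name glob>[/i]" query shape.
// Not Frida's implementation; it only illustrates the matching semantics.
function globToRegExp(glob, ignoreCase) {
  // Escape regex metacharacters, then turn '*' into '.*' and '?' into '.'.
  const body = glob
    .replace(/[.+^${}()|[\]\\]/g, '\\$&')
    .replace(/\*/g, '.*')
    .replace(/\?/g, '.');
  return new RegExp(`^${body}$`, ignoreCase ? 'i' : '');
}

function parseQuery(query) {
  const colon = query.indexOf(':');
  if (colon === -1) throw new Error('expected "<kind>:<module>!<name>"');
  const kind = query.slice(0, colon);
  let rest = query.slice(colon + 1);
  // A trailing "/i" switches both globs to case-insensitive matching.
  const ignoreCase = rest.endsWith('/i');
  if (ignoreCase) rest = rest.slice(0, -2);
  const bang = rest.indexOf('!');
  if (bang === -1) throw new Error('expected "!" between module and name');
  return {
    kind,
    module: globToRegExp(rest.slice(0, bang), ignoreCase),
    name: globToRegExp(rest.slice(bang + 1), ignoreCase),
  };
}

// True if a (module, item) pair is selected by the query.
function matches(query, moduleName, itemName) {
  const q = parseQuery(query);
  return q.module.test(moduleName) && q.name.test(itemName);
}
```

For instance, matches('sections:*!*text*/i', 'libfoo.so', '.TEXT') is true, while the same query without "/i" is not.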

Enjoy!

Some exciting improvements this time around:

Time for a new release, just in time for the weekend:

Time for a new release to refine a few things:

Only a few changes this time around:

For quite a few years I’ve been dreaming of taking Frida beyond user-space software, so it can also instrument OS kernels and barebone systems. Perhaps even microcontrollers…

Earlier this year my family’s cat door broke down. After some back and forth with the retailer, double-checking the installation and such, we would get it working for a little while, but it would eventually start malfunctioning again.

This was obviously not much fun for our cats:

It’s also no surprise that they’d end up making a lot of noise, which in turn made it hard to get a good night’s sleep, since we had to get up to let them back in manually.

I ended up buying a second cat door and, lo and behold, no more issues. The old one ended up collecting dust for a while. Something I kept thinking of was whether I could debug it, and perhaps even extend the software to do more useful things.

Feeling the urge to open it up to poke at the electronics inside of it, I eventually gave in:

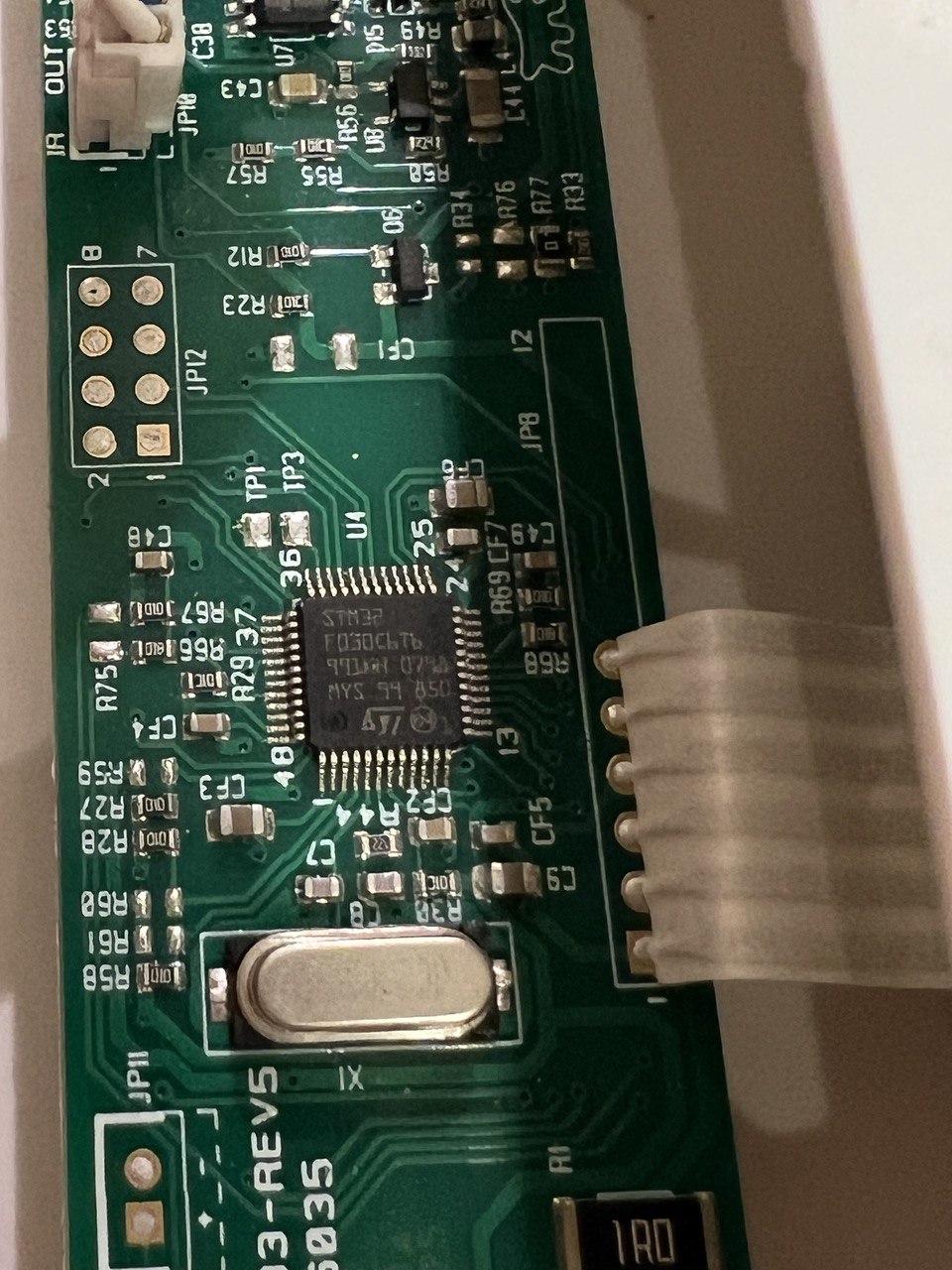

That looked like an STM32F030C6T6, which is an ARM Cortex-M0-based MCU. My first thought was whether I could dump the flash memory to do some static analysis.

After quickly skimming the MCU docs and doing a little bit of multimeter probing, I figured out the JP12 pads:

| PAD 1/2 | | | PAD 7/8 |
|---|---|---|---|
| BOOT0 | USART1 RX | SWDIO | GND |
| VDD | USART1 TX | SWCLK | |

This made it easy to pull BOOT0 high, so the MCU boots into its internal bootloader instead of user code.

By hooking up a USB to 3.3V TTL device to the USART1 pads, I could dump the flash:

$ ./stm32flash -r firmware.bin /dev/ttyUSB0

stm32flash 0.7

http://stm32flash.sourceforge.net/

Interface serial_posix: 57600 8E1

Version : 0x31

Option 1 : 0x00

Option 2 : 0x00

Device ID : 0x0444 (STM32F03xx4/6)

- RAM : Up to 4KiB (2048b reserved by bootloader)

- Flash : Up to 32KiB (size first sector: 4x1024)

- Option bytes : 16b

- System memory : 3KiB

Memory read

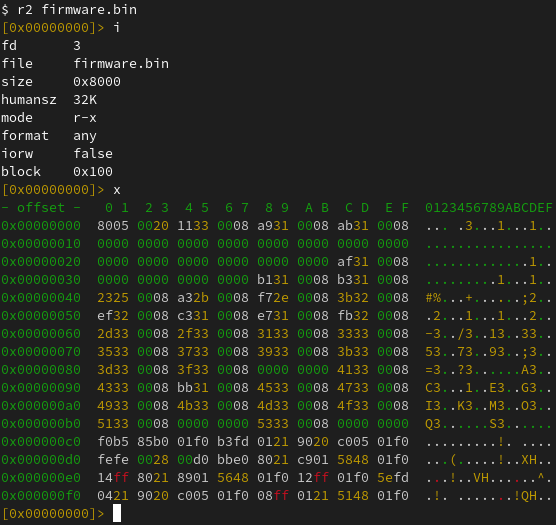

Read address 0x08008000 (100.00%) Done.And perform some static analysis:
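For the curious, the internal bootloader that stm32flash talks to speaks the USART protocol described in ST’s application note AN3155, which is also why the log shows 8E1 (8 data bits, even parity). Each command byte is followed by its complement, and the start address is followed by an XOR checksum. The sketch below builds the three frames of a single Read Memory (0x11) request and plans a 32 KiB dump in 256-byte chunks. It is our own illustration, not stm32flash’s code, and it leaves out the 0x7F autobaud byte and the ACK (0x79) handshakes between steps.

```javascript
// Illustrative AN3155 Read Memory framing; not stm32flash's implementation.
const CMD_READ_MEMORY = 0x11;

function commandFrame(cmd) {
  // Every command byte is sent together with its bitwise complement.
  return [cmd, cmd ^ 0xff];
}

function addressFrame(address) {
  // Four address bytes, most significant first, followed by their XOR.
  const bytes = [
    (address >>> 24) & 0xff,
    (address >>> 16) & 0xff,
    (address >>> 8) & 0xff,
    address & 0xff,
  ];
  const checksum = bytes.reduce((acc, b) => acc ^ b, 0);
  return [...bytes, checksum];
}

function lengthFrame(length) {
  // The host sends (length - 1) and its complement; at most 256 bytes per read.
  if (length < 1 || length > 256) throw new RangeError('1..256 bytes per read');
  const n = length - 1;
  return [n, n ^ 0xff];
}

function readMemoryFrames(address, length) {
  return [commandFrame(CMD_READ_MEMORY), addressFrame(address), lengthFrame(length)];
}

// Plan all requests needed to read `size` bytes starting at `base`.
function dumpPlan(base, size, chunk = 256) {
  const requests = [];
  for (let offset = 0; offset < size; offset += chunk) {
    requests.push(readMemoryFrames(base + offset, Math.min(chunk, size - offset)));
  }
  return requests;
}
```

With the flash at 0x08000000 and 32 KiB to read, dumpPlan(0x08000000, 0x8000) yields 128 requests, the last one starting at 0x08007f00, just below the 0x08008000 end address shown in the log.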

Seeing as two of the other pads are connected to SWDIO and SWCLK, used for Serial Wire Debug (SWD), the natural next step was to hook up a Raspberry Pi Debug Probe to those. After getting that set up, I fired up OpenOCD:

```
$ openocd -f interface/cmsis-dap.cfg -f target/stm32f0x.cfg
Open On-Chip Debugger 0.11.0-g8e3c38f7-dirty (2023-05-05-14:25)
Licensed under GNU GPL v2
For bug reports, read
	http://openocd.org/doc/doxygen/bugs.html
Info : auto-selecting first available session transport "swd". To override use 'transport select <transport>'.
Info : Listening on port 6666 for tcl connections
Info : Listening on port 4444 for telnet connections
Info : Using CMSIS-DAPv2 interface with VID:PID=0x2e8a:0x000c, serial=E6614103E78B482F
Info : CMSIS-DAP: SWD Supported
Info : CMSIS-DAP: FW Version = 2.0.0
Info : CMSIS-DAP: Interface Initialised (SWD)
Info : SWCLK/TCK = 0 SWDIO/TMS = 0 TDI = 0 TDO = 0 nTRST = 0 nRESET = 0
Info : CMSIS-DAP: Interface ready
Info : clock speed 1000 kHz
Info : SWD DPIDR 0x0bb11477
Info : stm32f0x.cpu: hardware has 4 breakpoints, 2 watchpoints
Info : starting gdb server for stm32f0x.cpu on 3333
Info : Listening on port 3333 for gdb connections
```

The idea I had been thinking about for a long time was to add a new Frida backend where the only process you can attach to is PID 0. Any scripts loaded there would actually run locally on the host, while implementing the familiar JavaScript API. Any API that accesses memory, such as dereferencing an int * by doing e.g. ptr('0x80000').readInt(), would end up querying the target, through SWD in the above case.

I initially started sketching this with the backend talking to the OpenOCD daemon through its telnet interface. But I quickly realized that it would be better to talk to its GDB-compatible remote stub. This way, Frida will be able to instrument any target with an available remote stub, whether that’s OpenOCD, Corellium (iOS kernel instrumentation!), QEMU, etc.
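A GDB remote stub speaks the GDB Remote Serial Protocol: each packet is framed as $payload#checksum, where the checksum is the sum of the payload bytes modulo 256 written as two hex digits, and a memory read is an "m<address>,<length>" request that the stub answers with hex-encoded bytes. The sketch below shows roughly what a ptr('0x80000').readInt() could turn into on the wire. The helper names are hypothetical, and the sketch ignores escaping, run-length encoding and error replies, so it is not how Frida’s backend is actually written.

```javascript
// Illustrative GDB RSP framing; not Frida's barebone backend code.
function rspChecksum(payload) {
  // Sum of the payload's character codes, modulo 256, as two hex digits.
  let sum = 0;
  for (const ch of payload) sum = (sum + ch.charCodeAt(0)) & 0xff;
  return sum.toString(16).padStart(2, '0');
}

function framePacket(payload) {
  return `$${payload}#${rspChecksum(payload)}`;
}

function readMemoryPacket(address, length) {
  // Address and length are hex-encoded without a 0x prefix.
  return framePacket(`m${address.toString(16)},${length.toString(16)}`);
}

function decodeInt32LE(hexReply) {
  if (hexReply.length !== 8) throw new Error(`unexpected reply: ${hexReply}`);
  const bytes = hexReply.match(/../g).map((b) => parseInt(b, 16));
  // Little-endian, as on the ARM targets in this post; the result is a signed 32-bit int.
  return (bytes[0] | (bytes[1] << 8) | (bytes[2] << 16) | (bytes[3] << 24)) | 0;
}
```

Under these assumptions, reading an int at 0x80000 sends "$m80000,4#c5", and a reply of "78563412" decodes to 0x12345678.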

As for Interceptor, my thinking was that basic functionality would be implemented using breakpoints, but only if the user supplies JavaScript callbacks. If function pointers are supplied instead, we could perform inline hooking so the target can run without any traps/ping-pongs with the host. This means it could even be used for observing and modifying hot code inside an OS kernel or MCU firmware.
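In GDB Remote Serial Protocol terms, a breakpoint is planted with a Z packet: Z0 asks the stub for a software breakpoint and Z1 for a hardware one, followed by the address and a “kind”, which on ARM is the instruction size (2 for a 16-bit Thumb instruction, 4 for ARM). The hypothetical helper below shows how a Thumb function pointer with bit 0 set, such as the init_rest() address used below, could be mapped onto such a packet. It is a sketch: whether Frida’s Interceptor.breakpointKind maps onto Z0/Z1 exactly like this is our assumption, and kind 2 assumes a 16-bit Thumb instruction at the target.

```javascript
// Hypothetical mapping from a code pointer to a GDB RSP breakpoint packet.
function breakpointPacket(address, { kind = 'soft', thumb = false } = {}) {
  // Thumb code pointers carry bit 0 set; the stub wants the real instruction address.
  const target = thumb ? address - (address & 1) : address;
  const type = kind === 'hard' ? 'Z1' : 'Z0';
  // Assumes a 16-bit Thumb instruction (kind 2) or a 32-bit ARM one (kind 4).
  const size = thumb ? 2 : 4;
  const payload = `${type},${target.toString(16)},${size}`;
  let sum = 0;
  for (const ch of payload) sum = (sum + ch.charCodeAt(0)) & 0xff;
  return `$${payload}#${sum.toString(16).padStart(2, '0')}`;
}
```

For example, breakpointPacket(0x0800306b, { kind: 'hard', thumb: true }) produces "$Z1,800306a,2#a7".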

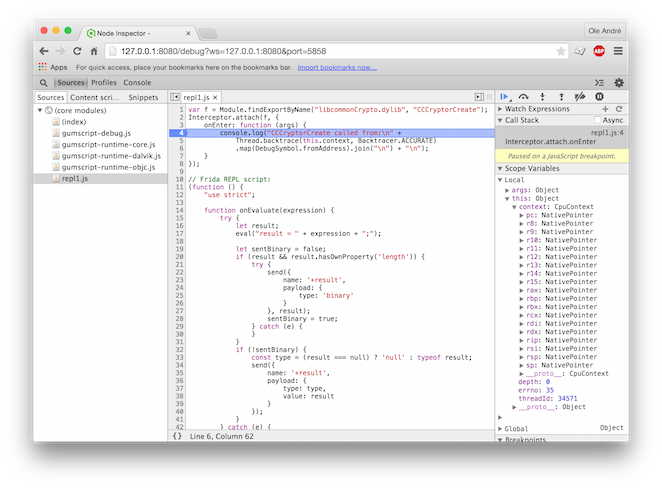

After some initial sketching, I was able to run the following script:

```javascript
// Ask for hardware breakpoints; this Cortex-M0 has four, per the OpenOCD log above.
Interceptor.breakpointKind = 'hard';

// Thumb code: function pointers need bit 0 set.
const THUMB_BIT = 1;

const initRest = ptr('0x0800306a').or(THUMB_BIT);

Interceptor.attach(initRest, {
  onEnter(args) {
    console.log('>>> init_rest()',
        JSON.stringify(this.context, null, 2));
  },
  onLeave(retval) {
    console.log(`<<< init_rest() retval=${retval}`);
  }
});
```

Using the Frida REPL:

```
$ frida -D barebone -p 0 -l demo.js
     ____
    / _  |   Frida 16.1.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to GDB Remote Stub (id=barebone)
[Remote::SystemSession ]-> $gdb.continue()
[Remote::SystemSession ]-> >>> init_rest() {
  "r7": "0xffffffff",
  "pc": "0x800306a",
  "r8": "0xffffffff",
  "xPSR": "0x41000000",
  "r9": "0xffffffff",
  "sp": "0x20000578",
  "r0": "0x0",
  "r10": "0xffffffff",
  "lr": "0x8003069",
  "r1": "0x40021008",
  "r11": "0xffffffff",
  "r2": "0xffffffff",
  "r12": "0xffffffff",
  "r3": "0xffffffff",
  "r4": "0xffffffff",
  "r5": "0xffffffff",
  "r6": "0xffffffff"
}
<<< init_rest() retval=0x1
```

A few things to note here:

While my fun little cat door side-quest is a great test case for the tiny part of the spectrum, there’s also a lot of potential in supporting larger systems.

One of the cooler use-cases there is definitely Corellium, as it means we can instrument the iOS kernel. Using a Tamarin Cable it should even be possible to get this working on a checkm8-exploitable physical device.

Before we touch on that though, let’s see if we can get things going with QEMU and a live Linux kernel.

First, we’ll fire up a VM we can play with:

```
$ pip install arm_now
$ arm_now start aarch64 --add-qemu-options='-gdb tcp::9000'
...
Welcome to arm_now
buildroot login:
```

Next, we’ll use the Frida REPL to look around:

```
$ export FRIDA_BAREBONE_ADDRESS=127.0.0.1:9000
$ frida -D barebone -p 0
     ____
    / _  |   Frida 16.1.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to GDB Remote Stub (id=barebone)
[Remote::SystemSession ]-> Process.arch
"arm64"
[Remote::SystemSession ]-> Process.enumerateRanges('r-x')
[
  {
    "base": "0xffffff8008080000",
    "protection": "r-x",
    "size": 4259840
  }
]
[Remote::SystemSession ]-> $gdb.state
"stopped"
[Remote::SystemSession ]-> $gdb.exception
{
  "breakpoint": null,
  "signum": 2,
  "thread": {}
}
[Remote::SystemSession ]-> $gdb.exception.thread.readRegisters()
{
  "cpsr": 1610613189,
  "pc": "0xffffff8008096648",
  "sp": "0xffffff80085f3f10",
  "x0": "0x0",
  "x1": "0xffffff80085e6b78",
  "x10": "0x880",
  "x11": "0xffffffc00e877180",
  "x12": "0x0",
  "x13": "0xffffffc00ffe1f30",
  "x14": "0x0",
  "x15": "0xfffffff8",
  "x16": "0xffffffbeff000000",
  "x17": "0x0",
  "x18": "0xffffffc00ffe17e0",
  "x19": "0xffffff80085e0000",
  "x2": "0x40079f5000",
  "x20": "0xffffff80085f892c",
  "x21": "0xffffff80085f88a0",
  "x22": "0xffffff80085ffe80",
  "x23": "0xffffff80085ffe80",
  "x24": "0xffffff80085d5028",
  "x25": "0x0",
  "x26": "0x0",
  "x27": "0x0",
  "x28": "0x405a0018",
  "x29": "0xffffff80085f3f10",
  "x3": "0x30c",
  "x30": "0xffffff800808492c",
  "x4": "0x0",
  "x5": "0x40079f5000",
  "x6": "0x1",
  "x7": "0x1c0",
  "x8": "0x2",
  "x9": "0xffffff80085f3e80"
}
[Remote::SystemSession ]->
```

You might wonder how we’ve implemented Process.enumerateRanges(). This part is for now only implemented on arm64, and it is accomplished by parsing the page tables. (And if we’re talking to Corellium’s remote stub we use a vendor-specific monitor command to save ourselves a lot of network roundtrips.)
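To give a feel for what parsing the page tables involves, here is a simplified sketch that decodes AArch64 stage-1 level-3 page descriptors for a 4 KiB granule, as seen from EL1, and coalesces neighbouring pages with equal protection into ranges like the one printed above. It is our own illustration, not Frida’s code: it assumes the table walk has already produced (virtual address, descriptor) pairs, and it ignores block descriptors, other granule sizes and memory attributes.

```javascript
// Simplified AArch64 level-3 page descriptor decoding (4 KiB granule, EL1 view).
const PAGE_SIZE = 0x1000n;

function decodePageDescriptor(desc) {
  // Bits [1:0] must be 0b11 for a valid level-3 page descriptor.
  if ((desc & 0b11n) !== 0b11n) return null;
  const ap2 = (desc >> 7n) & 1n;  // AP[2]: 1 means read-only
  const pxn = (desc >> 53n) & 1n; // PXN: 1 means not executable at EL1
  return {
    physical: desc & 0x0000fffffffff000n, // output address, bits [47:12]
    protection: 'r' + (ap2 === 0n ? 'w' : '-') + (pxn === 0n ? 'x' : '-'),
  };
}

// pages: [{ va, desc }] sorted by virtual address, all BigInt values.
function coalesce(pages) {
  const ranges = [];
  for (const { va, desc } of pages) {
    const page = decodePageDescriptor(desc);
    if (page === null) continue; // unmapped
    const last = ranges[ranges.length - 1];
    if (last && last.protection === page.protection && last.base + last.size === va) {
      last.size += PAGE_SIZE; // extend the current range
    } else {
      ranges.push({ base: va, protection: page.protection, size: PAGE_SIZE });
    }
  }
  return ranges;
}
```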

So now that we’re peeking into a running kernel, one of the things we might want to do is find internal functions and data structures. This is where the memory-scanning API comes in handy:

```javascript
for (const r of Process.enumerateRanges('r-x')) {
  console.log(JSON.stringify(r, null, 2));

  // Pattern, then ":" and a mask; bits that are zero in the mask are ignored.
  const matches = Memory.scanSync(r.base, r.size,
      '7b2000f0 fa03082a 992480d2 : 1f00009f ffffffff 1f00e0ff');
  console.log('Matches:', JSON.stringify(matches, null, 2));
}
```

Here we’re looking for the Linux kernel’s arm64 syscall handler, matching on its first three instructions. We use the masking feature to mask out the immediates of the ADRP and MOV instructions (the first and third instructions).
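The pattern syntax is hex bytes, a colon, then mask bytes, and a byte matches when it agrees with the pattern on every bit set in the mask. The sketch below reimplements that rule naively in plain JavaScript over a Uint8Array, just to make the semantics concrete. It is not how Memory.scanSync() works internally, and it ignores the ?? wildcards that Frida patterns also support.

```javascript
// Naive masked byte-pattern search; an illustration, not Frida's scanner.
function parsePattern(spec) {
  const [patternPart, maskPart] = spec.split(':');
  const toBytes = (s) => s.replace(/\s+/g, '').match(/../g).map((h) => parseInt(h, 16));
  const pattern = toBytes(patternPart);
  // Without an explicit mask, every bit must match.
  const mask = maskPart === undefined ? pattern.map(() => 0xff) : toBytes(maskPart);
  if (mask.length !== pattern.length) throw new Error('mask length must match pattern');
  return { pattern, mask };
}

function scanMasked(haystack, spec) {
  const { pattern, mask } = parsePattern(spec);
  const matches = [];
  outer: for (let i = 0; i + pattern.length <= haystack.length; i++) {
    for (let j = 0; j < pattern.length; j++) {
      // Compare only the bits selected by the mask.
      if ((haystack[i + j] & mask[j]) !== (pattern[j] & mask[j])) continue outer;
    }
    matches.push({ offset: i, size: pattern.length });
  }
  return matches;
}
```

Fed bytes where the ADRP and MOV immediates differ from the pattern but the masked bits agree, scanMasked() still reports a 12-byte match, which is exactly what lets the search survive a different kernel build.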

Let’s take it for a spin:

```
$ frida -D barebone -p 0 -l scan.js
     ____
    / _  |   Frida 16.1.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to GDB Remote Stub (id=barebone)
Attaching...
{
  "base": "0xffffff8008080000",
  "size": 4259840,
  "protection": "r-x"
}
Matches: [
  {
    "address": "0xffffff8008082f00",
    "size": 12
  }
]
[Remote::SystemSession ]->
```

So now we’ve dynamically detected the kernel’s internal syscall handler! 🚀

Re-implementing the memory scanning feature was one of the highlights for me personally, as @hsorbo and I had a lot of fun pair-programming on it. The implementation is conceptually very similar to what we’re doing in our Fruity backend for jailed iOS, and in our new Linux injector: instead of transferring the data to the host and searching it there, we can get away with transferring only the search algorithm and running it on the target.

The memory scanner implementation is written in Rust, and helped lay the groundwork for a cool new feature I’m going to cover a bit later in this post.

So, now that we know where the Linux kernel’s syscall handler is, we can use Interceptor to install an instruction-level hook:

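```javascript
// Address of the syscall handler found by the scan above.
const el0Svc = ptr('0xffffff8008082f00');

Interceptor.attach(el0Svc, function (args) {
  const { context } = this;
  // On arm64 Linux the syscall number is passed in x8.
  const scno = context.x8.toUInt32();
  console.log(`syscall! scno=${scno}`);
});
```

And try that out on our running VM: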

```
$ frida -D barebone -p 0 -l kernhook.js
     ____
    / _  |   Frida 16.1.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to GDB Remote Stub (id=barebone)
[Remote::SystemSession ]-> $gdb.continue()
[Remote::SystemSession ]-> syscall! scno=63
syscall! scno=64
syscall! scno=73
syscall! scno=63
syscall! scno=64
syscall! scno=73
syscall! scno=63
syscall! scno=64
syscall! scno=56
syscall! scno=62
syscall! scno=64
syscall! scno=57
syscall! scno=29
syscall! scno=134
...
```

And that’s it: we are monitoring system calls across the entire system! 💥
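The raw numbers become easier to read with names attached. On arm64, Linux uses the generic syscall table from include/uapi/asm-generic/unistd.h. The hypothetical lookup below covers only the numbers that show up in the output above, and describeSyscall() is our own helper that could be called from the hook callback instead of printing the bare number.

```javascript
// Subset of the asm-generic syscall numbers used by arm64 Linux.
const ARM64_SYSCALL_NAMES = new Map([
  [29, 'ioctl'],
  [56, 'openat'],
  [57, 'close'],
  [62, 'lseek'],
  [63, 'read'],
  [64, 'write'],
  [73, 'ppoll'],
  [134, 'rt_sigaction'],
]);

// Hypothetical helper: name plus number when known, number only otherwise.
function describeSyscall(scno) {
  const name = ARM64_SYSCALL_NAMES.get(scno);
  return name === undefined ? `syscall #${scno}` : `${name} (${scno})`;
}
```

For example, describeSyscall(63) returns 'read (63)', and an unlisted number such as 999 comes back as 'syscall #999'.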

One of the first things you’ll probably notice if you try the previous example is that it slows down the system quite a bit. This is because Interceptor uses breakpoints when a JavaScript function is specified as the callback.

Not to worry though. If we write our callback in machine code and pass a NativePointer instead, Interceptor will pick a different strategy: it will modify the target’s machine code to redirect execution to a trampoline, which in turn calls the function at the address that we specify.
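As a rough illustration of what modifying the target’s machine code can look like on arm64, the sketch below encodes two classic redirect sequences: a single B when the trampoline is within ±128 MiB, and otherwise LDR X16, #8 followed by BR X16 and the 64-bit target address. This is a generic technique shown for illustration only. A real hooking engine such as Frida’s also has to relocate the instructions it overwrites, which this sketch leaves out, and the choice of X16 here is our assumption.

```javascript
// Generic arm64 redirect encodings; addresses are BigInts.
function encodeB(from, target) {
  const offset = target - from; // byte offset
  if (offset % 4n !== 0n) throw new Error('branch target must be 4-byte aligned');
  const limit = 1n << 27n; // imm26 * 4 covers +/-128 MiB
  if (offset < -limit || offset >= limit) return null;
  const imm26 = Number((offset >> 2n) & 0x3ffffffn);
  return (0x14000000 | imm26) >>> 0; // B <target>
}

// Far redirect: LDR X16, #8 ; BR X16 ; .quad target (16 bytes total).
function encodeFarJump(target) {
  const bytes = new Uint8Array(16);
  const view = new DataView(bytes.buffer);
  view.setUint32(0, 0x58000050, true); // LDR X16, [PC, #8]
  view.setUint32(4, 0xd61f0200, true); // BR X16
  view.setBigUint64(8, target, true);  // absolute target address
  return bytes;
}

// Pick the shortest sequence that reaches the target.
function encodeRedirect(from, target) {
  const b = encodeB(from, target);
  if (b === null) return encodeFarJump(target);
  const bytes = new Uint8Array(4);
  new DataView(bytes.buffer).setUint32(0, b, true);
  return bytes;
}
```

The short form overwrites only one instruction, so fewer instructions need relocating; the far form costs 16 bytes but reaches anywhere in the address space.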

So that’s great. We only need to get our machine code into memory. Some of you may be familiar with our CModule API. We don’t yet implement that one in this new Barebone backend (and we will, eventually!), but we have something even better. Enter RustModule:

```javascript
const kernBase = ptr('0xffffff8008080000');
// Offset of proc_pid_status() in this particular kernel build.
const procPidStatus = kernBase.add(0x15e600);

const m = new RustModule(`
#[no_mangle]
pub unsafe extern "C" fn hook(ic: &mut gum::InvocationContext) {
    let regs = &mut ic.cpu_context;
    println!("proc_pid_status() was called with x0={:#x} x1={:#x}",
        regs.x[0],
        regs.x[1],
    );
}
`);

// m.hook is a NativePointer, so Interceptor inline-hooks instead of using breakpoints.
Interceptor.attach(procPidStatus, m.hook);
```

The RustModule implementation uses a local Rust toolchain, assumed to be on your PATH, to compile the code you provide into a self-contained no_std ELF. It relocates this ELF and writes it into the target’s memory. As part of this it also parses the MMU’s page tables and inserts new entries there, so the uploaded code becomes part of the virtual address space, mapped with read/write/execute permissions.
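To make “relocates this ELF” a bit more concrete: for position-independent, self-contained code, much of the work typically comes down to R_AARCH64_RELATIVE entries (type 1027), each of which says “store load base + addend at this offset”. The sketch below applies such entries to an image held in a Uint8Array. It is our own illustration rather than RustModule’s code, and it assumes the relocations have already been parsed out of the ELF’s .rela.dyn section.

```javascript
// Illustrative relocation step for a position-independent ELF image.
const R_AARCH64_RELATIVE = 1027;

// relocations: [{ offset, type, addend }], with BigInt offset and addend as in Elf64_Rela.
function applyRelativeRelocations(image, loadBase, relocations) {
  const view = new DataView(image.buffer, image.byteOffset, image.byteLength);
  for (const { offset, type, addend } of relocations) {
    if (type !== R_AARCH64_RELATIVE) {
      throw new Error(`unsupported relocation type ${type}`);
    }
    // *(u64 *)(image + offset) = loadBase + addend, little-endian.
    view.setBigUint64(Number(offset), BigInt.asUintN(64, loadBase + addend), true);
  }
  return image;
}
```

Anything beyond RELATIVE entries, such as references to external symbols, would need the symbol addresses passed in from the outside.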

In this example we’re hooking proc_pid_status() in our live Linux kernel.

Note that File.readAllText() can be used to avoid having to inline Rust code inside your JavaScript. Here we’re using inline code for the sake of brevity.

Now, with our Rust-powered agent, let’s take it for a spin:

```
$ frida -D barebone -p 0 -l kernhook2.js
     ____
    / _  |   Frida 16.1.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to GDB Remote Stub (id=barebone)
Error: to enable this feature, set FRIDA_BAREBONE_HEAP_BASE to the physical base address to use, e.g. 0x48000000
    at <eval> (/home/oleavr/src/demo/kernhook2.js:13)
    at evaluate (native)
    at <anonymous> (/frida/repl-2.js:1)
[Remote::SystemSession ]->
```

Oops! That didn’t quite work. There is still one piece missing in our new backend: we don’t yet have any “kernel bridges” in place that automatically fingerprint the internals of known kernels in order to find a suitable internal memory allocator we can use. Such bridges will also be needed to implement APIs such as Process.enumerateModules(), which will allow listing the loaded kernel modules/kexts. We could also locate the kernel’s process list and implement enumerate_processes(), so frida-ps works. And those are only a couple of examples… How about injecting frida-gadget into a user-space process? That would be super-useful on an embedded system where we want to avoid modifying the flash. Anyway, I digress 😊

So, on MCUs and unknown kernels, you will have to tell Frida which region of physical memory it may clobber if you want to use intrusive features such as RustModule, Interceptor in its inline hooking mode, Memory.alloc(), etc.
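Conceptually, once you hand over such a region, allocations can be carved out of it one after another. The bump allocator below is a purely hypothetical sketch of that idea, starting at a base like the 0x48000000 example value from the error message. The region size parameter is our own assumption, since the environment variable only gives a base address. This is not taken from the backend’s source, and a real implementation would also have to map the pages into the virtual address space.

```javascript
// Hypothetical bump allocator over a reserved physical region (BigInt addresses).
function createBumpAllocator(base, size, alignment = 16n) {
  const end = base + size;
  let next = base;
  return {
    alloc(n) {
      // Round up to the requested alignment (a power of two).
      const aligned = (next + alignment - 1n) & ~(alignment - 1n);
      if (aligned + n > end) throw new Error('heap region exhausted');
      next = aligned + n;
      return aligned;
    },
  };
}
```

With createBumpAllocator(0x48000000n, 0x1000n), a 10-byte allocation lands at 0x48000000 and the next one at 0x48000010. Nothing is ever freed, which is the usual trade-off of this design.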

With that in mind, let’s retry our example, but this time we’ll set the FRIDA_BAREBONE_HEAP_BASE environment variable:

```
$ export FRIDA_BAREBONE_HEAP_BASE=0x48000000
$ frida -D barebone -p 0 -l kernhook2.js
     ____
    / _  |   Frida 16.1.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to GDB Remote Stub (id=barebone)
[Remote::SystemSession ]-> m
{
  "hook": "0xffffff80080103e0"
}
[Remote::SystemSession ]-> $gdb.continue()
```

Yay! 🎉 So now, in the terminal where we have QEMU running, let’s try accessing /proc/$pid/status three times, so the hooked function gets called:

```
# head -3 /proc/self/status
Name: head
Umask: 0022
State: R (running)
# head -3 /proc/self/status
Name: head
Umask: 0022
State: R (running)
# head -3 /proc/self/status
Name: head
Umask: 0022
State: R (running)
```

Over in our REPL, we should see our hook() getting hit three times:

```
proc_pid_status() was called with x0=0xffffffc00d4bca00 x1=0xffffff8008608758
proc_pid_status() was called with x0=0xffffffc00d4bc780 x1=0xffffff8008608758
proc_pid_status() was called with x0=0xffffffc00d4bc780 x1=0xffffff8008608758
```

It works! 🥳

There’s one important thing to note though: in our example we’re using println!(), which actually causes the target to hit a breakpoint, so the host can read out the message passed to it and bubble it up just like a console.log() from JavaScript. This means you should only use this feature for temporary debugging purposes, and throttle how often it’s called if it’s on a hot code-path.

The next thing you might want to do is pass external symbols into your RustModule, for example if you want to call internal kernel functions from your Rust code. This is accomplished by declaring them like this:

extern "C" {

fn frobnicate(data: *const u8, len: usize);

}Then when constructing the RustModule, pass it in through the second argument:

```javascript
const m = new RustModule(source, {
  frobnicate: ptr('0xffffff8008084320'),
});
```

For those of you familiar with our CModule API, this part is exactly the same. You can also use NativeCallback to implement portions host-side, in JavaScript, but this needs to be handled with care to avoid performance bottlenecks. Going in the opposite direction, there is also NativeFunction, which you can use to call into your Rust code from JavaScript.

Last but not least, you may also want to import existing Rust crates from crates.io. This is also supported:

```javascript
const m = new RustModule(source, {}, {
  dependencies: [
    'cstr_core = { version = "0.2.6", default-features = false }',
  ]
});
```

What’s so exciting is that all of the Linux bits above “just work” on Corellium as well. All you need to do is point FRIDA_BAREBONE_ADDRESS at the endpoint shown in Corellium’s UI under “Advanced Options” -> “gdb”.

Shout-out to the awesome folks at Corellium for their support while doing this. They even implemented new protocol features to improve interoperability 🔥

This new backend should be considered alpha quality for now, but I think it’s already capable of so many useful things that it would be a shame to keep it sitting on a branch.

You may notice that the JS APIs implemented only cover a subset, and not all features are available on non-arm64 targets yet. But all of this will improve as the backend matures. (Pull-requests are super-welcome!)

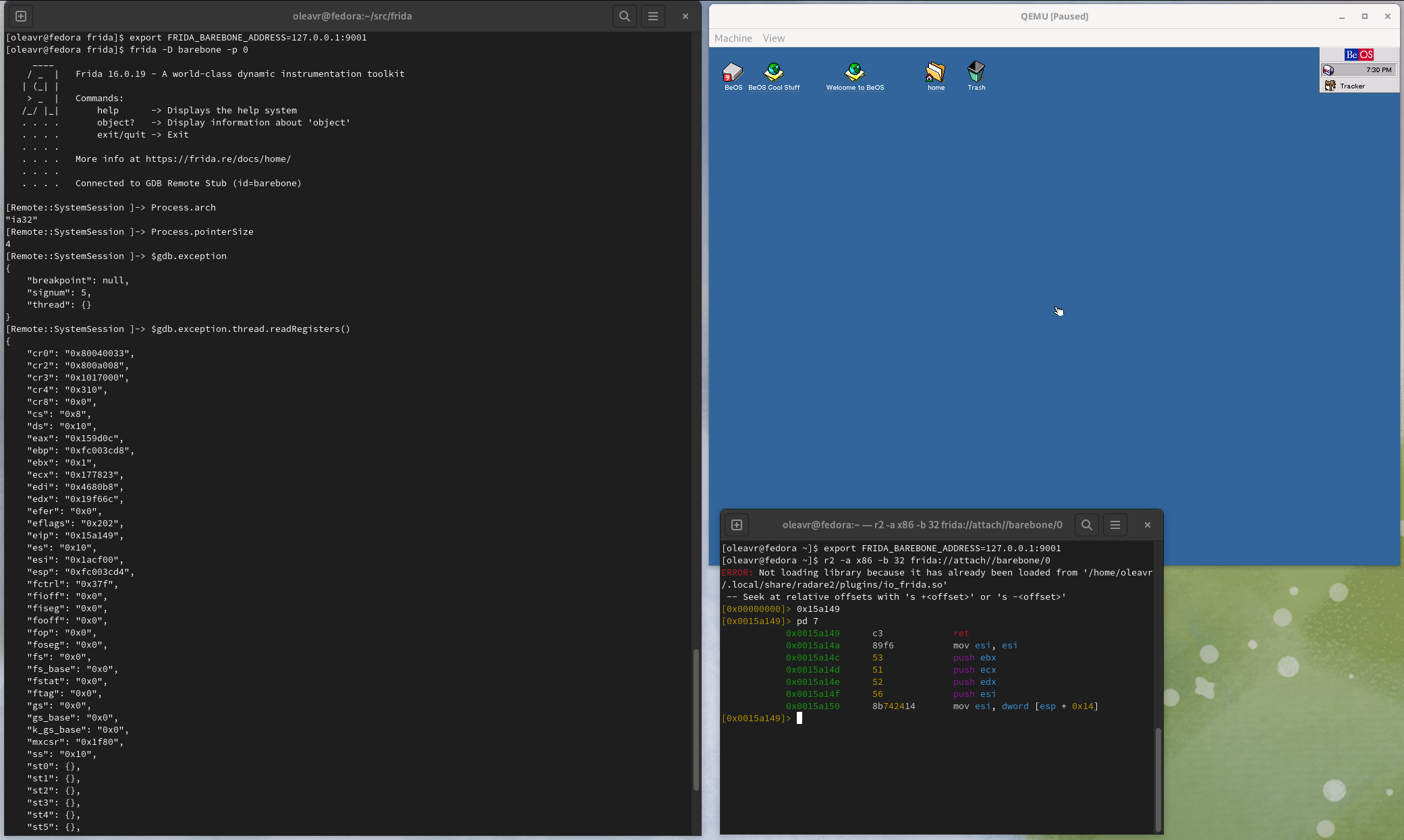

And as a fun aside, here’s Frida attached to the BeOS kernel:

There’s also a bunch of other exciting changes, so definitely check out the changelog below.

Enjoy!

Some exciting stability improvements this time around:

We solve these problems by:

Just a quick bug-fix release reviving support for Android x86/x86_64 systems with ARM emulation. This is still a blind spot in our CI, and I forgot all about it while working on the new Linux injector. Kudos to @stopmosk for promptly reporting and helping triage this regression.

Time for a bug-fix release with only one change: turns out the ARMv8 BTI interop introduced in 16.0.14 is problematic on Apple A12+ SoCs when running in arm64e mode, i.e. with pointer authentication enabled.

Kudos to @miticollo for reporting and helping triage the cause, and @mrmacete for digging further into it, brainstorming potential fixes, and implementing the fix. You guys rock! ❤️

Seeing as TypeScript 5.0 was released last month, and frida.Compiler was still at 4.9, we figured it was time to upgrade it. So with this release we’re now shipping 5.0.4. The upgrade also revealed a couple of bugs in our V8-based runtime, and that our embedded frida-gum typings were slightly outdated.

Enjoy!

Here’s a release fixing two stability issues affecting users on Apple platforms:

More exciting bug-fixes:

Only a few bug-fixes this time around:

Lots of goodies this time around. One of them is our brand new Linux injector. This was a lot of fun, especially as it involved lots of pair-programming with @hsorbo.

We’re quite excited about this one. Frida now supports injecting into Linux containers, such as Flatpak apps. Not just that, it can finally inject code into processes without a dynamic linker present.

Another neat improvement: if you’re running Frida on Linux >= 3.17, you may notice that it no longer writes out any temporary files. This is accomplished through memfd and a reworked injector signaling mechanism.

Our new Linux injector has a fully asynchronous design, without any dangerous blocking operations that can result in deadlocks. It is also the first injector to support providing a control channel to the injected payload.

Down the road the plan is to implement this in our other backends as well, and make it part of our cross-platform Injector API. The thinking there is to make it easier to implement custom payloads.

There are also many other goodies in this release, so definitely check out the changelog below.

Enjoy!

This time we’re bringing you additional iOS 15 improvements, an even better frida.Compiler, brand new support for musl libc, and more. Do check out the changelog below for more details.

Enjoy!

The main theme of this release is improved support for jailbroken iOS. We now support iOS 16.3, including app listing and launching. Also included is improved support for iOS 15, and some regression fixes for older iOS versions.

There are also some other goodies in this release, so definitely check out the changelog below.

Enjoy!

This time we’ve focused on polishing up our macOS and iOS support. For those of you using spawn() and spawn-gating for early instrumentation, things are now in much better shape.

The most exciting change in this release is all about performance. Programs that previously took a while to start when launched using Frida should now start a lot quicker. This long-standing bottleneck was so bad that an app with a lot of libraries could fail to launch because Frida slowed down its startup too much.

Next up we have a fix for a long-standing reliability issue. Turns out our file-descriptors used for IPC did not have SO_NOSIGPIPE set, so we could sometimes end up in a situation where either Frida or the target process terminated abruptly, and the other side would end up getting zapped by SIGPIPE while trying to write().

The previous release introduced some bold new changes to support injecting into hardened targets. Since then @hsorbo and I dug back into our recent GLib kqueue() patch and fixed some rough edges. We also fixed a regression where attaching to hardened processes through usbmuxd would fail with “connection closed”.

On the Linux and Android side of things, some of you may have noticed that thread enumeration could fail randomly, especially inside busy processes. That issue has now finally been dealt with.

Also, thanks to @drosseau, we now have an error-handling improvement that should avoid some confusion when things fail in 32-/64-bit cross-arch builds.

That is all this time around. Enjoy!

It’s been a busy week. Let’s dive in.

This week @hsorbo and I spent some days trying to get Frida working better in sandboxed environments. Our goal was to be able to get Frida into Apple’s SpringBoard process on iOS. But to make things a little interesting, we figured we’d start with imagent, the daemon that handles the iMessage protocol. It has been hardened quite a bit in recent OS versions, and Frida was no longer able to attach to it.

So we first started out with this daemon on macOS, just to make things easier to debug. After finding the daemon’s sandbox profile at /System/Library/Sandbox/Profiles/com.apple.imagent.sb, it was hard to miss the syscall policy. It disallows all syscalls by default, and carefully enables some groups of syscalls, plus some specific ones that it also needs.

We then discovered that Frida’s use of the pipe() syscall was the first hurdle. This code is not actually in Frida itself, but in GLib, the excellent library that Frida uses for data structures, cross-platform threading primitives, event loop, etc. It uses pipe() to implement a primitive needed for its event loop. More precisely, it uses this primitive to wake up the event loop’s thread in case it is blocking in a poll()-style syscall.

Anyway, we noticed that kqueue() is part of the groups of syscalls explicitly allowed. Given that Apple’s kqueue() supports polling file-descriptors and Mach ports at the same time, among other things, it’s likely to be needed in a lot of places, and thus allowed by a broad range of sandbox profiles. It is also a great fit for us, since EVFILT_USER means there is a way to wake up the event loop’s thread. Not just that, but it doesn’t cost us a single file-descriptor.

After lots of coffee and fun pair-programming, we arrived at a patch that switches GLib’s event loop over to kqueue() on OSes that support it. This got us to the next hurdle: Frida is using socket APIs for file-descriptor passing, part of the child-gating feature used for instrumenting children of the current process. However, since hardened system services aren’t likely to be allowed to do things like fork() and execve(), it is fine to simply degrade this part of our functionality. That was tackled next, and boom… Frida is finally able to attach to imagent. 🎉 Yay!

Next up we moved over to iOS and took it for a spin there. Much to our surprise, Frida could attach to SpringBoard right out of the gate. Later we tried notifyd and profiled, and could attach to those too, even on the latest iOS 16. But there’s still work to do, as Frida cannot yet attach to imagent and WebContent on iOS. This is exciting progress, though.

While doing all of this we also tracked down a crash on iOS where frida-server would get killed due to EXC_GUARD during injection on iOS >= 15. That has now also been fixed, just in time for the release!

Another exciting piece of news is that @mrmacete improved our DebugSymbol API to consistently provide the full path instead of only the filename. This was a long-standing inconsistency across our different platform backends. While at it he also exposed the column, so you also get that in addition to the line number.

Last but not least it’s worth mentioning an exciting new improvement in Interceptor. For those of you using it from C, there’s now replace_fast() to complement replace(). This new fast variant emits an inline hook that vectors directly to your replacement. You can still call the original if you want to, but it has to be called through the function pointer that Interceptor gives you as an optional out-parameter. It also cannot be combined with attach() for the same target. It is a lot faster though, so definitely good to be aware of when needing to hook functions in hot code-paths.

That’s all this time. Enjoy!

Turns out a serious stability regression made it into Frida 16.0.3, where our out-of-process dynamic linker for Apple OSes could end up crashing the target process. This is especially disastrous when the target process is launchd, as it results in a kernel panic. Thanks to the amazing work of @mrmacete, this embarrassing regression is now fixed. 🎉 Enjoy!

Quick bug-fix release this time around: the Android system_server instrumentation adjustments should now work reliably on all systems.

Here’s a brand new release just in time for the weekend! 🎉 A few critical stability fixes this time around.

Enjoy!

Some cool new things this time. Let’s dive right in.

One of the exciting contributions this time around came from @tmm1, who opened a whole bunch of pull-requests adding support for tvOS. Yay! As part of landing these I took the opportunity to add support for watchOS as well.

This also turned out to be a great time to simplify the build system, getting rid of complexity introduced to support non-Meson build systems such as autotools. So as part of this clean-up we now have separate binaries for Simulator targets such as the iOS Simulator, tvOS Simulator, etc. Previously we only supported the x86_64 iOS Simulator, and now arm64 is covered as well.

Earlier this week, @hsorbo and I did some fun and productive pair-programming where we tackled the dynamic linker changes in Apple’s latest OSes. Those of you using Frida on i/macOS may have noticed that spawn() stopped working on macOS 13 and iOS 16.

This was a fun one. It turns out that the dyld binary on the filesystem now looks in the dyld_shared_cache for a dyld with the same UUID as itself, and if found vectors execution there instead. Explaining why this broke Frida’s spawn() functionality needs a little context though, so bear with me.

Part of what Frida does when you call attach() is to inject its agent, if it hasn’t already done this. Before performing the injection however, we check if the process is sufficiently initialized, i.e. whether libSystem has been initialized.

When this isn’t the case, such as after spawn(), where the target is suspended at dyld’s entrypoint, Frida basically advances the main thread’s execution until it reaches a point where libSystem is ready. This is typically accomplished using a hardware breakpoint.

So because the new dyld now chains to another copy of itself, inside the dyld_shared_cache, Frida was placing a breakpoint in the version mapped in from the filesystem, instead of the one in the cache. Obviously that never got hit, so we would end up timing out while waiting for this to happen.

The fix was reasonably straightforward though, so we managed to squeeze this one into the release at the last minute.

The frida.Compiler just got a whole lot better, and now supports additional configuration through tsconfig.json, as well as using local frida-gum typings.

The V8 debugger integration was knocked out by the move to having one V8 Isolate per script, which was a delicate refactoring needed for V8 snapshot support. This is now back in working order.

One of the heavier lifts this time around was clearly dependency upgrades, where most of our dependencies have now been upgraded: from Capstone supporting ARMv9.2 to latest GLib using PCRE2, etc.

The move to PCRE2 means our Memory.scan() regex support just got upgraded, since GLib was previously using PCRE1. We don’t yet enable PCRE2’s JIT on any platforms though, but this would be an easy thing to improve down the road.

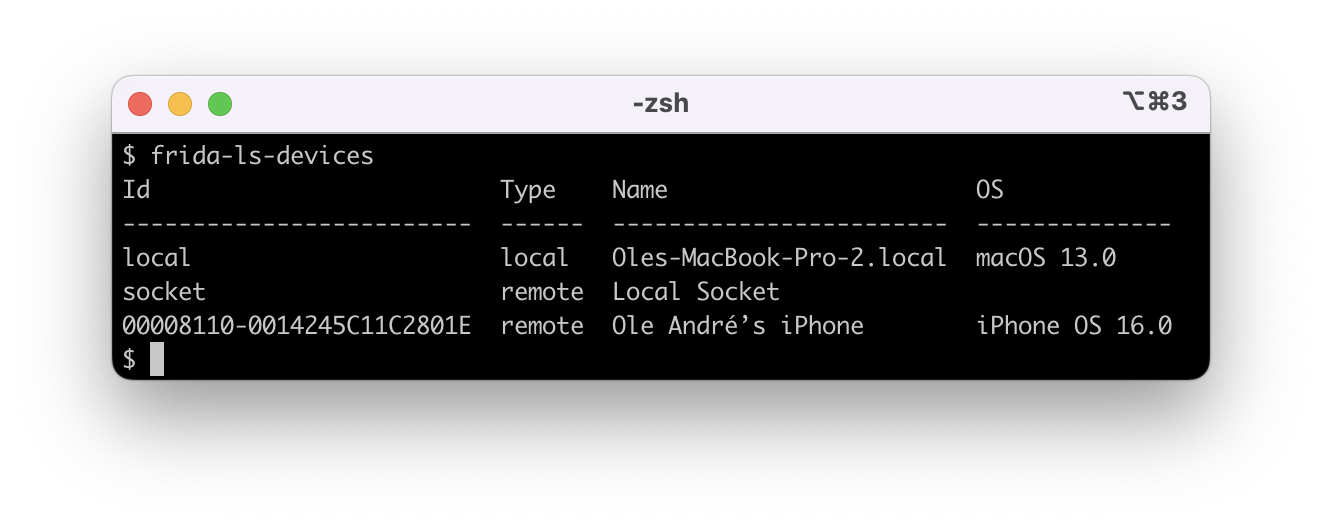

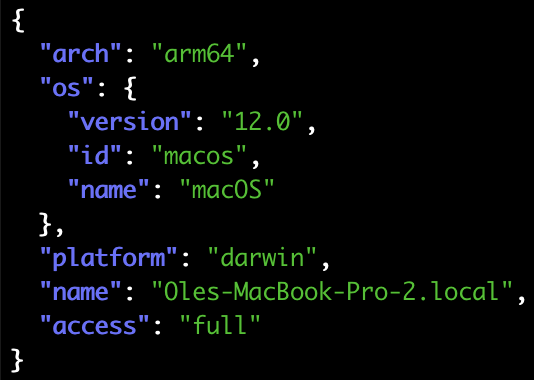

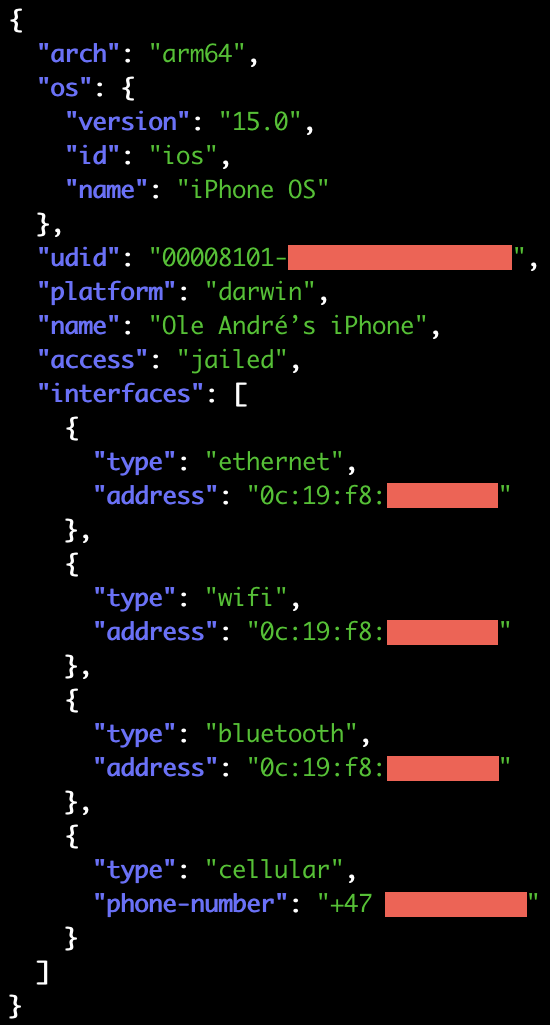

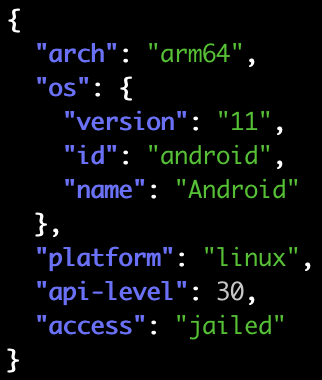

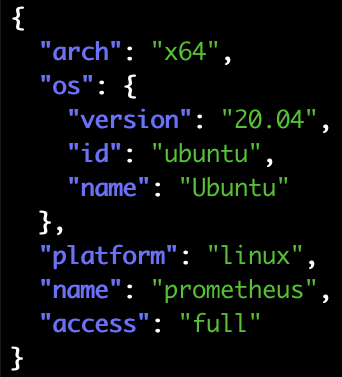

We also have a brand new release of frida-tools, which thanks to @tmm1 has a new and exciting feature. The frida-ls-devices tool now displays higher fidelity device names, with OS name and version displayed in a fourth column:

To upgrade:

```
$ pip3 install -U frida frida-tools
```

There are also some other goodies in this release, so definitely check out the changelog below.

Enjoy!

It’s Friday! Here’s a brand new release with lots of improvements:

Two small but significant bugfixes this time around:

Hope some of you are enjoying frida.Compiler! In case you have no idea what that is, check out the 15.2.0 release notes.

Back in 15.2.0 there was something that bothered me about frida.Compiler: it would take a few seconds just to compile a tiny “Hello World”, even on my i9-12900K Linux workstation:

```
$ time frida-compile explore.ts -o _agent.js

real    0m1.491s
user    0m3.016s
sys     0m0.115s
```

After a lot of profiling and insane amounts of yak shaving, I finally arrived at this:

```
$ time frida-compile explore.ts -o _agent.js

real    0m0.325s
user    0m0.244s
sys     0m0.109s
```

That’s quite a difference: wall-clock time dropped from 1.491 s to 0.325 s, roughly 4.6× faster. This means on-the-fly compilation use-cases such as frida -l explore.ts are now a lot smoother. More importantly, though, it means Frida-based tools can load user scripts this way without making their users suffer through seconds of startup delay.

You might be wondering how we made our compiler so quick to start. If you take a peek under the hood, you’ll see that it uses the TypeScript compiler. This is quite a bit of code to parse and run at startup. Also, loading and processing the .d.ts files that define all of the types involved is actually even more expensive.

The first optimization that we implemented back in 15.2 was to simply use our V8 runtime if it’s available. That alone gave us a nice speed boost. However, after a bit of profiling it was clear that V8 realized that it’s dealing with a heavy workload once we start processing the .d.ts files, and that resulted in it spending a big chunk of time just optimizing the TypeScript compiler’s code.

This reminded me of a really cool V8 feature that I’d noticed a long time ago: custom startup snapshots. Basically if we could warm up the TypeScript compiler ahead of time and also pre-create all of the .d.ts source files when building Frida, we could snapshot the VM’s state at that point and embed the resulting startup snapshot. Then at runtime we can boot from the snapshot and hit the ground running.

As part of implementing this, I extended GumJS so a snapshot can be passed to create_script(), together with the source code of the agent. There is also snapshot_script(), used to create the snapshot in the first place.

For example:

```python
import frida

session = frida.attach(0)

snapshot = session.snapshot_script("const example = { magic: 42 };",
                                   warmup_script="true",
                                   runtime="v8")

print("Snapshot created! Size:", len(snapshot))
```

This snapshot could then be saved to a file and later loaded like this:

```python
script = session.create_script("console.log(JSON.stringify(example));",
                               snapshot=snapshot,
                               runtime="v8")
script.load()
```

Note that snapshots need to be created on the same OS/architecture/V8 version as they’re later going to be loaded on.

Another exciting bit of news is that we’ve upgraded V8 to 10.x, which means we get to enjoy the latest VM refinements and JavaScript language features. Considering that our last upgrade was more than two years ago, it’s definitely a solid upgrade this time around.

As you may recall from the 15.1.15 release notes, we were closer than ever to reaching the milestone where all of Frida can be built with a single build system. The only component left at that point was V8, which we used to build using Google’s GN build system. I’m happy to report that we have finally reached that milestone. We now have a brand new Meson build system for V8. Yay!

There’s also a bunch of other exciting changes, so definitely check out the changelog below.

Enjoy!

Two more improvements, just in time for the weekend:

Two small but significant bugfixes this time around:

Super-excited about this one. What I’ve been wanting to do for years is to streamline Frida’s JavaScript developer experience. As a developer I may start out with a really simple agent, but as it grows I start to feel the pain.

Early on I may want to split up the agent into multiple files. I may also want to use some off-the-shelf packages from npm, such as frida-remote-stream. Later I’d want code completion, inline docs, type checking, etc., so I move the agent to TypeScript and fire up VS Code.

Since we’ve been piggybacking on the amazing frontend web tooling that’s already out there, we already have all the pieces of the puzzle. We can use a bundler such as Rollup to combine our source files into a single .js, we can use @frida/rollup-plugin-node-polyfills for interop with packages from npm, and we can plug in @rollup/plugin-typescript for TypeScript support.

That is quite a bit of plumbing to set up over and over though, so I eventually created frida-compile as a simple tool that does the plumbing for you, with configuration defaults optimized for what makes sense in a Frida context. Still though, this does require some boilerplate such as package.json, tsconfig.json, and so forth.

To solve that, I published frida-agent-example, a repo that can be cloned and used as a starting point. That is still a bit of friction, so later frida-tools got a new CLI tool called frida-create. Anyway, even with all of that, we’re still asking the user to install Node.js and deal with npm, and potentially also feel confused by the .json files just sitting there.

Then it struck me. What if we could use frida-compile to compile frida-compile into a self-contained .js that we can run on Frida’s system session? The system session is a somewhat obscure feature where you can load scripts inside of the process hosting frida-core. For example if you’re using our Python bindings, that process would be the Python interpreter.

Once we are able to run that frida-compile agent inside of GumJS, we can communicate with it and turn that into an API. This API can then be exposed by language bindings, and frida-tools can consume it to give the user a frida-compile CLI tool that doesn’t require Node.js/npm to be installed. Tools such as our REPL can seamlessly use this API too if the user asks it to load a script with a .ts extension.

And all of that is precisely what we have done! 🥳

Here’s how easy it is to use it from Python:

```python
import frida

compiler = frida.Compiler()
bundle = compiler.build("agent.ts")
```

The bundle variable is a string that can be passed to create_script(), or written to a file.

Running that example we might see something like:

```
Traceback (most recent call last):
  File "/home/oleavr/src/explore.py", line 4, in <module>
    bundle = compiler.build("agent.ts")
  File "/home/oleavr/.local/lib/python3.10/site-packages/frida/core.py", line 76, in wrapper
    return f(*args, **kwargs)
  File "/home/oleavr/.local/lib/python3.10/site-packages/frida/core.py", line 1150, in build
    return self._impl.build(entrypoint, **kwargs)
frida.NotSupportedError: compilation failed
```

That makes us wonder why it failed, so let’s add a handler for the diagnostics signal:

```python
import frida

def on_diagnostics(diag):
    print("on_diagnostics:", diag)

compiler = frida.Compiler()
compiler.on("diagnostics", on_diagnostics)
bundle = compiler.build("agent.ts")
```

And suddenly it’s all making sense:

```
on_diagnostics: [{'category': 'error', 'code': 6053,
                  'text': "File '/home/oleavr/src/agent.ts' not "
                          "found.\n The file is in the program "
                          "because:\n Root file specified for"
                          " compilation"}]
```

…We forgot to actually create the file! Ok, let’s create agent.ts:

```ts
console.log("Hello from Frida:", Frida.version);
```

And let’s also write that script to a file:

```python
import frida

def on_diagnostics(diag):
    print("on_diagnostics:", diag)

compiler = frida.Compiler()
compiler.on("diagnostics", on_diagnostics)
bundle = compiler.build("agent.ts")

with open("_agent.js", "w", newline="\n") as f:
    f.write(bundle)
```

If we now run it, we should have an _agent.js ready to go:

```
$ cat _agent.js
📦
175 /explore.js.map
39 /explore.js
✄
{"version":3,"file":"explore.js","sourceRoot":"/home/oleavr/src/","sources":["explore.ts"],"names":[],"mappings":"AAAA,OAAO,CAAC,GAAG,CAAC,SAAS,KAAK,CAAC,OAAO,GAAG,CAAC,CAAC"}
✄
console.log(`Hello ${Frida.version}!`);
```

This weird-looking format is how GumJS allows us to opt into the new ECMAScript Module (ESM) format, where code is confined to the module it belongs to instead of being evaluated in the global scope. This also means that we can load multiple modules that import/export values. The .map files are optional and can be omitted, but if left in, they allow GumJS to map the generated JavaScript line numbers back to TypeScript in stack traces.
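To make the layout above concrete, here is a minimal Python sketch of a reader for it. It is inferred only from the sample output shown here, so treat the details as assumptions: a 📦 marker line, one "<size> <path>" header line per asset (the size appears to be the asset’s length in UTF-8 bytes, since /explore.js is exactly 39 bytes), a ✄ line closing the header, and a ✄ line between consecutive assets. The name parse_bundle is hypothetical and not part of Frida’s API.

```python
from __future__ import annotations

MAGIC = "📦\n".encode("utf-8")
SEPARATOR = "\n✄\n".encode("utf-8")


def parse_bundle(data: bytes) -> dict[str, str]:
    """Split a bundle laid out like _agent.js above into {path: contents}.

    Assumed layout (inferred from sample output): a "📦" line, one
    "<size> <path>" line per asset, a "✄" line, then the assets in header
    order with a "✄" line between them. Sizes are byte counts.
    """
    if not data.startswith(MAGIC):
        raise ValueError("missing 📦 marker")

    # The first separator terminates the header.
    header_end = data.index(SEPARATOR)
    entries = []
    for line in data[len(MAGIC):header_end].decode("utf-8").splitlines():
        size, path = line.split(" ", 1)
        entries.append((path, int(size)))

    assets = {}
    offset = header_end + len(SEPARATOR)
    for index, (path, size) in enumerate(entries):
        if index > 0:
            # Every asset after the first is preceded by a separator line.
            if data[offset:offset + len(SEPARATOR)] != SEPARATOR:
                raise ValueError(f"missing separator before {path}")
            offset += len(SEPARATOR)
        chunk = data[offset:offset + size]
        if len(chunk) != size:
            raise ValueError(f"{path} is truncated")
        assets[path] = chunk.decode("utf-8")
        offset += size
    return assets
```

For example, parse_bundle(open("_agent.js", "rb").read()) should yield the /explore.js.map and /explore.js entries. Reading each asset by its declared size, rather than splitting on ✄, keeps the reader correct even if an asset’s text happens to contain that character.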

Anyway, let’s take _agent.js for a spin:

```
$ frida -p 0 -l _agent.js
     ____
    / _  |   Frida 15.2.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to Local System (id=local)
Attaching...
Hello 15.2.0!
[Local::SystemSession ]->
```

It works! Now let’s try refactoring it to split the code into two files:

agent.ts:

```ts
import { log } from "./log.js";

log("Hello from Frida:", Frida.version);
```

log.ts:

```ts
export function log(...args: any[]) {
    console.log(...args);
}
```

If we now run our example compiler script again, it should produce a slightly more interesting-looking _agent.js:

```
📦
204 /agent.js.map
72 /agent.js
199 /log.js.map
58 /log.js
✄
{"version":3,"file":"agent.js","sourceRoot":"/home/oleavr/src/","sources":["agent.ts"],"names":[],"mappings":"AAAA,OAAO,EAAE,GAAG,EAAE,MAAM,UAAU,CAAC;AAE/B,GAAG,CAAC,mBAAmB,EAAE,KAAK,CAAC,OAAO,CAAC,CAAC"}
✄
import { log } from "./log.js";
log("Hello from Frida:", Frida.version);
✄
{"version":3,"file":"log.js","sourceRoot":"/home/oleavr/src/","sources":["log.ts"],"names":[],"mappings":"AAAA,MAAM,UAAU,GAAG,CAAC,GAAG,IAAW;IAC9B,OAAO,CAAC,GAAG,CAAC,GAAG,IAAI,CAAC,CAAC;AACzB,CAAC"}
✄
export function log(...args) {
    console.log(...args);
}
```

Loading that into the REPL should yield the exact same result as before.

Let’s turn our toy compiler into a tool that loads the compiled script, and recompiles whenever a source file changes on disk:

```python
import frida
import sys

session = frida.attach(0)
script = None

def on_output(bundle):
    global script
    if script is not None:
        print("Unloading old bundle...")
        script.unload()
        script = None
    print("Loading bundle...")
    script = session.create_script(bundle)
    script.on("message", on_message)
    script.load()

def on_diagnostics(diag):
    print("on_diagnostics:", diag)

def on_message(message, data):
    print("on_message:", message)

compiler = frida.Compiler()
compiler.on("output", on_output)
compiler.on("diagnostics", on_diagnostics)
compiler.watch("agent.ts")

sys.stdin.read()
```

And off we go:

```
$ python3 explore.py
Loading bundle...
Hello from Frida: 15.2.0
```

If we leave that running and then edit the source code on disk, we should see some new output:

```
Unloading old bundle...
Loading bundle...
Hello from Frida version: 15.2.0
```

Yay!

We can also use frida-tools’ new frida-compile CLI tool:

```
$ frida-compile agent.ts -o _agent.js
```

It also supports watch mode:

```
$ frida-compile agent.ts -o _agent.js -w
```

Our REPL is also powered by the new frida.Compiler:

```
$ frida -p 0 -l agent.ts
     ____
    / _  |   Frida 15.2.0 - A world-class dynamic instrumentation toolkit
   | (_| |
    > _  |   Commands:
   /_/ |_|       help      -> Displays the help system
   . . . .       object?   -> Display information about 'object'
   . . . .       exit/quit -> Exit
   . . . .
   . . . .   More info at https://frida.re/docs/home/
   . . . .
   . . . .   Connected to Local System (id=local)
Compiled agent.ts (1428 ms)
Hello from Frida version: 15.2.0
[Local::SystemSession ]->
```

Shoutout to @hsorbo for the fun and productive pair-programming sessions where we were working on frida.Compiler together! 🙌

There are also quite a few other goodies in this release, so definitely check out the changelog below.

Enjoy!

A couple of exciting new things in this release.

Those of you using our JavaScript File API may have noticed that it supports writing to the given file, but there was no way to read from it. This is now supported.

For example, to read each line of a text file as a string:

```js
const f = new File('/etc/passwd', 'r');
let line;
while ((line = f.readLine()) !== '') {
  console.log(`Read line: ${line.trimEnd()}`);
}
```

(Note that this assumes that the text file is UTF-8-encoded. Other encodings are not currently supported.)

You can also read a certain number of bytes at some offset:

```js
const f = new File('/var/run/utmp', 'rb');
f.seek(0x2c);
const data = f.readBytes(3);
const str = f.readText(3);
```

The argument may also be omitted to read the rest of the file. But if you’re just looking to read a text file in one go, there’s an easier way:

```js
const text = File.readAllText('/etc/passwd');
```

Reading a binary file is just as easy:

```js
const bytes = File.readAllBytes('/var/run/utmp');
```

(Where bytes is an ArrayBuffer.)

Sometimes you may also want to dump a string into a text file:

```js
File.writeAllText('/tmp/secret.txt', 'so secret');
```

Or perhaps dump an ArrayBuffer:

```js
// args[0] is a NativePointer, e.g. inside an Interceptor onEnter(args) callback
const data = args[0].readByteArray(256);
File.writeAllBytes('/tmp/mystery.bin', data);
```

Going back to the example earlier, seek() also supports relative offsets:

```js
f.seek(7, File.SEEK_CUR);
f.seek(-3, File.SEEK_END);
```

Retrieving the current file offset is just as easy:

```js
const offset = f.tell();
```

The other JavaScript API addition this time around is for when you want to compute checksums. While this could be implemented entirely in JavaScript, in “userland”, we get it fairly cheaply since Frida depends on GLib, which already provides a Checksum API out of the box. All we needed to do was expose it.

Putting it all together, this means we can read a file and compute its SHA-256:

```js
const utmp = File.readAllBytes('/var/run/utmp');
const str = Checksum.compute('sha256', utmp);
```

Or, for more control:

```js
const checksum = new Checksum('sha256');
checksum.update(File.readAllText('/etc/hosts'));
checksum.update(File.readAllBytes('/var/run/utmp'));
console.log('Result:', checksum.getString());
console.log(hexdump(checksum.getDigest(), { ansi: true }));
```

(You can learn more about this API from our TypeScript bindings.)
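If the agent sends a digest back to the host, for instance with send(), it can be handy to cross-check it there. Here is a minimal Python sketch using hashlib, assuming the agent hashed exactly the same bytes and reports the digest as a hex string (GLib’s string form is lowercase hex). The helper names are hypothetical. Feeding chunks one at a time mirrors calling update() repeatedly, which gives the same digest as hashing their concatenation.

```python
import hashlib


def sha256_hex(*chunks: bytes) -> str:
    """Hash the chunks in order, like successive Checksum update() calls."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()  # lowercase hex


def matches_agent(agent_hex: str, *chunks: bytes) -> bool:
    """Compare a hex digest reported by the agent with one computed locally."""
    return agent_hex.strip().lower() == sha256_hex(*chunks)
```

For the two-update example above you would pass the host’s copies of /etc/hosts and /var/run/utmp as two byte chunks; this assumes the agent’s text was hashed as UTF-8, so any text you hash on the host should be encoded the same way.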

There are also a few other goodies in this release, so definitely check out the changelog below.

Enjoy!

It appears I should have had some more coffee this morning, so here’s another release to actually fix this broken fix from 15.1.25:

- java: (android) Prevent ART from compiling replaced methods

Turns out the kAccCompileDontBother constant changed in Android 8.1. It also didn’t exist before 7.0. Oops! This release fixes it, for real this time 😊

Quite a few exciting bits in this release. Let’s dive right in.

Some great news for those of you using Frida on 32- and 64-bit ARM. Up until now, we have only exposed the CPU’s integer registers, but as of this release, FPU/vector registers are also available! 🎉

For 32-bit ARM this means q0 through q15, d0 through d31, and s0 through s31. As for 64-bit ARM they’re q0 through q31, d0 through d31, and s0 through s31. If you’re accessing these from JavaScript, the vector properties are represented using ArrayBuffer, whereas for the others we’re using the number type.
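The different counts follow from how the names overlap. On 32-bit ARM, qN is the pair d(2N):d(2N+1), and only d0-d15 are further split into s0-s31; on arm64, dN and sN are simply the low 64 and 32 bits of the same 128-bit register. Here is a small Python sketch that decodes a 16-byte q value (for example, the ArrayBuffer contents shipped to the host with send()) into the views that overlap it. It assumes little-endian byte order, and decode_q is a hypothetical helper, not a Frida API; in the agent itself, d and s are already available as numbers.

```python
from __future__ import annotations

import struct


def decode_q(raw: bytes, index: int, arch: str) -> dict[str, float]:
    """Decode the 16 bytes of qN into the d/s registers that overlap it.

    Assumes little-endian byte order.
    """
    if len(raw) != 16:
        raise ValueError("a q register holds 16 bytes")

    if arch == "arm64":
        # q0-q31: dN and sN are the low 64/32 bits of the same register.
        if not 0 <= index <= 31:
            raise ValueError("arm64 has q0-q31")
        return {
            f"d{index}": struct.unpack_from("<d", raw)[0],
            f"s{index}": struct.unpack_from("<f", raw)[0],
        }

    if arch == "arm":
        # q0-q15: qN is the pair d(2N), d(2N+1).
        if not 0 <= index <= 15:
            raise ValueError("32-bit ARM has q0-q15")
        low, high = struct.unpack("<2d", raw)
        views = {f"d{2 * index}": low, f"d{2 * index + 1}": high}
        # Only d0-d15 (that is, q0-q7) also split into s registers.
        if index < 8:
            for i, value in enumerate(struct.unpack("<4f", raw)):
                views[f"s{4 * index + i}"] = value
        return views

    raise ValueError(f"unsupported arch: {arch}")
```

So q1 on 32-bit ARM covers d2, d3 and s4-s7, while q9 covers only d18 and d19.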

The frames returned by our existing Java.backtrace() API now also expose two new properties: methodFlags and origin.

I finally plugged a memory leak in our RPC server-side code. This was introduced by me in 15.1.10 when implementing an optimization in the Vala compiler’s code generation for DBus reply handling. Shoutout to @rev1si0n for reporting and helping track down this regression!

There are also some other goodies in this release, so definitely check out the changelog below.

Enjoy!

Only one change this time, but it’s an important one for those of you using Frida on Android: Our Java method hooking implementation was crashing in some cases, where we would pick a scratch register that conflicted with the generated code. This is now fixed.

Enjoy!

The main theme of this release is OS support, where we’ve fixed some rough edges on Android 12, and introduced preliminary support for Android 13. While working on frida-java-bridge I also found myself needing some of the JVMTI code in the JVM-specific backend. Those bits are now shared, and the JVM-specific code is in better shape, with brand new support for JDK 17.

We have also improved stability in several places, and made it easier to use the CodeWriter APIs safely. Portability has also been improved, where our QuickJS-based JavaScript runtime finally works when cross-compiled for big-endian from a little-endian build machine, and vice versa.

To learn more, be sure to check out the changelog below.

Enjoy!

Turns out the major surgery that Gum went through in 15.1.15 introduced some error-handling regressions. The errors thrown did not match those expected by the Vala code in frida-core, which resulted in the process crashing instead of a friendly error bubbling up to e.g. the Python bindings. That is now finally taken care of. I wish we had noticed it sooner, though; we’re clearly lacking test coverage in this area.

Besides the error-handling fixes, we’re also including a build system fix for incremental build issues. Kudos to Londek for this nice contribution.

Enjoy!

Turns out 15.1.18 had a release automation bug that resulted in stale Python binding artifacts getting uploaded.

To make this release a little happier, I also threw in a Stalker improvement for x86/64, where the clone3 syscall is now handled as well. This was caught by Stalker’s test-suite on some systems.

Enjoy!

Lots of improvements all over the place this time, including many stability improvements.

I have continued improving our CI and build system infrastructure. QNX and MIPS support are back from the dead, after long-standing regressions. These went unnoticed due to lack of CI coverage. We now have CI in place to ensure that won’t happen again.

Do check out the changelog below for a full overview of what’s new.

Enjoy!

One notable improvement in this release is that Java.backtrace() got a major overhaul. It is now lazy and more than 10x faster. I have also refined its API, which is now considered stable.

While working on frida-java-bridge, I optimized how Env objects are handled, so we can recycle an existing instance if we already have one for the current thread.

The remaining goodies are covered by the changelog below, so definitely check it out.

Enjoy!

This time we’re bringing you two bugfixes and one new feature, just in time for the weekend.

Gum used to depend on GIO, but that dependency was removed in the previous release. The unfortunate result of that change was that agent and gadget no longer tore down GIO, as they were relying on Gum’s teardown code doing that. What this meant was that we were leaving threads behind, and that is never a good thing. So that’s the first bugfix.

Also in the previous release, over in our Python bindings, setup.py went through some heavy changes. We improved the .egg download logic, but managed to break the local .egg logic. That’s the second bugfix.

Onto the new feature. For those of you using Gum’s JavaScript bindings, GumJS, we now support console.count() and console.countReset(). These are implemented by browsers and Node.js, and make it easy to count the number of times a given label has been seen. Kudos to @yotamN for this nice contribution.
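If you haven’t used these in browsers or Node.js, here is a small Python model of the bookkeeping they do, following the WHATWG Console Standard: each label (defaulting to "default") has its own counter, count() reports "<label>: <n>", and countReset() sets the counter back to zero. This only illustrates the semantics; it is not GumJS code, and the warning text for resetting an unknown label is an assumption modelled on Node.js.

```python
from __future__ import annotations

from typing import Callable


class CountingConsole:
    """Models the label bookkeeping behind console.count()/countReset()."""

    def __init__(self, emit: Callable[[str], None] = print):
        self._counts: dict[str, int] = {}
        self._emit = emit

    def count(self, label: str = "default") -> None:
        # Each label has its own counter; the first call reports 1.
        self._counts[label] = self._counts.get(label, 0) + 1
        self._emit(f"{label}: {self._counts[label]}")

    def count_reset(self, label: str = "default") -> None:
        # Resetting sets the counter to 0, so the next count() reports 1.
        if label in self._counts:
            self._counts[label] = 0
        else:
            # Assumed wording, modelled on Node.js.
            self._emit(f"Count for '{label}' does not exist")
```

In an agent, calling console.count('open') inside an Interceptor callback would similarly print open: 1, open: 2, and so on.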

Enjoy!

Quite a few exciting bits in this release. Let’s dive right in.

Our ambition is to support all platforms that our users care about. In this release I wanted to plant the first seed in expanding that to BSDs. So now I’m thrilled to announce that Frida finally also supports FreeBSD! 🎉

For now we only support x86_64 and arm64, but expanding to the remaining architectures is straightforward in case anybody is interested in helping out.

The porting effort resulted in several architectural refinements and improved robustness for ELF-based OSes in general. It also gave me some ideas on how to improve our Linux injector to support injecting into containers, which is something I’d like to do down the road.

Back in 15.1.10, Stalker got a massive performance boost on x86/64. In this release those same ideas have been applied to our arm64 backend. This includes improved locality, better inline caches, etc. I’m told we were able to beat or match QEMU in FuzzBench back then, and now we should be in good shape on arm64 as well. We also managed to improve stability while at it. Exciting!

Back in 14.1, @meme wired up build system support for GObject Introspection. This means we have a machine-readable description of all of our APIs, which lets us piggyback on existing language binding infrastructure, and even get auto-generated reference docs for free.

This release adds a lot of annotations and doc-strings to Gum, and we are now closer than ever to having auto-generated reference docs. There is still some work left to do before it makes sense to publish the generated documentation, but it’s not far off. If anyone is interested in pitching in, check out Gum’s CI and have a look at the warnings output by GObject Introspection.

One thing I really like about the Meson build system is its support for subprojects. Gum now supports being used as a subproject. Some of you may already be consuming Gum through its devkit binaries, and now you have a brand new option that is even easier.

The main advantage over using a devkit is that everything is built from source, so it’s easy to experiment with the code.

Let’s say we have a file hello.c that contains:

```c
#define _GNU_SOURCE
#include <dlfcn.h>
#include <fcntl.h>
#include <gum.h>
#include <stdio.h>
#include <unistd.h>

static int (* open_impl) (const char * path, int oflag, ...);
static int replacement_open (const char * path, int oflag, ...);

int
main (int argc,
      char * argv[])
{
  gum_init ();

  GumInterceptor * interceptor = gum_interceptor_obtain ();

  gum_interceptor_begin_transaction (interceptor);
  /* Replace open(); Gum stores a way to call the original in open_impl. */
  gum_interceptor_replace (interceptor, dlsym (RTLD_DEFAULT, "open"),
      replacement_open, NULL, (gpointer *) &open_impl);
  gum_interceptor_end_transaction (interceptor);

  /* These calls now go through replacement_open(). */
  close (open ("/etc/hosts", O_RDONLY));
  close (open ("/etc/fstab", O_RDONLY));

  return 0;
}

static int
replacement_open (const char * path,
                  int oflag,
                  ...)
{
  printf ("!!! open(\"%s\", 0x%x)\n", path, oflag);

  /* Call the original; the optional mode argument is ignored for brevity. */
  return open_impl (path, oflag);
}
```

To build it, we can create a meson.build next to it with the following:

```meson
project('hello', 'c')

gum = dependency('frida-gum-1.0')

executable('hello', 'hello.c', dependencies: [gum])
```

And create subprojects/frida-gum.wrap containing:

```ini
[wrap-git]
url = https://github.com/frida/frida-gum.git
revision = main
depth = 1

[provide]
dependency_names = frida-gum-1.0, frida-gum-heap-1.0, frida-gum-prof-1.0, frida-gumjs-1.0
```

In case you don’t have Meson and Ninja already installed, run pip install meson ninja.

Then, to build and run:

```
$ meson setup build
$ meson compile -C build
$ ./build/hello
!!! open("/etc/hosts", 0x0)
!!! open("/etc/fstab", 0x0)
```

We put a lot of effort into making sure that Frida can scale from desktops all the way down to embedded systems. In this release I spent some time profiling our binary footprint, and based on this I ended up making a slew of tweaks and build options to reduce our footprint.

I was curious how small I could make a Gum Hello World program that only uses Interceptor. The end result was measured on 32-bit ARM w/ Thumb instructions, where Gum and its dependencies are statically linked, and the only external dependency is the system’s libc.

The result was as small as 55K (!), and that made me really excited. What I did was to introduce new build options in Gum, GLib, and Capstone. For Gum we now support a “diet” mode where we don’t make use of GObject and only offer a plain C API. This means it won’t support GObject Introspection and fancy language bindings. It also means we don’t offer the full Gum API, but that is something that can be expanded on in the future.

Similarly, GLib also has a new “diet” mode, which boils down to disabling its slice allocator, debugging features, and a few other minor tweaks like that.

As for Capstone, I ended up introducing a new “profile” option that can be set to “tiny”. The result of doing so is that Capstone only understands enough of the instruction set to determine each instruction’s length, and provide some details on position-dependent instructions. The idea is to only support what our Relocator implementations need, as those do most of the heavy lifting behind Interceptor and Stalker.

While I wouldn’t recommend using these build options unless you really need a footprint that small, it’s good to be aware of what’s possible. We also offer other, less extreme options. Read more in our footprint docs.

Something that has been bothering me for as long as Frida has existed is that building Frida involves dealing with multiple build systems. While we do of course try to hide that complexity behind scripts/makefiles, we are inevitably going to have unhappy users who find themselves trying to figure out why Frida is not building for them.

Some are also interested in cross-compiling Frida for a slightly different libc, toolchain, or what have you. They may even be looking to add support for an OS we don’t yet support. Chances are that we’re going to demotivate them the moment they realize they need to deal with four different build-systems: Meson, autotools, custom Perl scripts (OpenSSL), and GN (V8).

As we are happy users of Meson, my goal is to “Mesonify all the things!”. With this release, we have now finally reached the point where virtually all of our required dependencies are built using Meson. The only exception is V8, but we will hopefully also build that with Meson someday. (Spoiler from the future: Frida 16 will get us there!)

There’s also a bunch of other exciting changes, so definitely check out the changelog below.

Enjoy!

Introducing the brand new Swift bridge! Now that Swift has been ABI-stable since version 5, this long-awaited bridge allows Frida to play nicely with binaries written in Swift. Whether you consider Swift a static or a dynamic language, one thing is for sure: it just got a lot more dynamic with this Frida release.

Probably the first thing a reverser does when they start reversing a binary is getting to know the different data structures that the binary defines. So it made the most sense to start by building the Swift equivalent of the ObjC.classes and ObjC.protocols APIs. But since Swift has other first-class types, namely structs and enums, and since the Swift runtime doesn’t offer reflection primitives, at least not in the sense that Objective-C does, it meant we had to dig a little deeper.

Luckily for us, the Swift compiler emits metadata for each type defined by the binary. This metadata is bundled in a TargetTypeContextDescriptor C++ struct, defined in include/swift/ABI/Metadata.h at the time of writing. This data structure includes the type name, its fields, its methods (if applicable), and other useful data depending on the type at hand. These data structures are pointed to by relative pointers (defined in include/swift/Basic/RelativePointer.h). In Mach-O binaries, these are stored in the __swift5_types section.
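A relative pointer stores a signed 32-bit displacement from the address of the pointer field itself, which keeps the metadata position-independent. Here is a minimal Python sketch of resolving them over a raw section dump, where base is the address the section is mapped at; both function names are hypothetical. The assumption that each __swift5_types record packs a 2-bit reference kind (0 for a direct reference to the descriptor, 1 for an indirect one) into the low bits of its displacement is based on TargetTypeMetadataRecord in Metadata.h, so verify it against the Swift version you target.

```python
from __future__ import annotations

import struct


def resolve_relative(blob: bytes, base: int, field_offset: int,
                     tag_bits: int = 0) -> tuple[int, int]:
    """Resolve the 32-bit relative pointer stored at blob[field_offset].

    The target is the field's own address (base + field_offset) plus the
    stored signed displacement. If tag_bits > 0, that many low bits of the
    displacement are split off as a small integer tag.
    Returns (target_address, tag).
    """
    (raw,) = struct.unpack_from("<i", blob, field_offset)
    mask = (1 << tag_bits) - 1
    return base + field_offset + (raw & ~mask), raw & mask


def walk_type_records(section: bytes, base: int) -> list[tuple[int, int]]:
    """List (target_address, kind) for each 4-byte record in the section.

    Assumption: kind 0 points straight at a type context descriptor, while
    kind 1 points at a pointer to one (an indirect reference).
    """
    return [resolve_relative(section, base, offset, tag_bits=2)
            for offset in range(0, len(section) - 3, 4)]
```

Note that the displacement is relative to each record’s own address, so two records holding the same value point at different targets.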

So to dump types, Frida basically iterates over these data structures and parses them along the way, very similar to what dsdump does, except that you don’t have to build the Swift compiler in order to tinker with it.

Frida also has the advantage of being able to probe into internal Apple dylibs written in Swift, and that’s because we don’t need to parse the dyld_shared_cache thanks to the private getsectiondata API, which gives us section offsets hassle-free.

Once we have the metadata, we’re able to easily create JavaScript wrappers for object instances and values of different types.

To be on par with the Objective-C bridge, the Swift bridge has to support calling Swift functions, which also proved not to be straightforward.

Swift defines its own calling convention, swiftcall, which, to put it succinctly, tries to be as efficient as possible. That means not wasting load and store instructions on structs that are smaller than four registers’ worth of size, and instead passing those kinds of structs directly in registers. And since that could quickly overbook our precious 8 argument registers (x0-x7 on AArch64), it doesn’t use the first register for the self argument, which gets a dedicated register instead (x20 on AArch64). It also defines an error register (x21 on AArch64) where callees can store errors which they throw.

What we just described above is termed “physical lowering” in the Swift compiler docs, and it’s implemented by the back-end, LLVM.

The process that precedes physical lowering is termed “semantic lowering,” which is the compiler front-end figuring out who “owns” a value and whether it’s direct or indirect. Some structs, even though they might be smaller than 4 registers, have to be passed indirectly, because, for example, they are generic and thus their exact memory layout is not known at compile time, or because they include a weak reference that has to be in-memory at all times.

We had to implement both semantic and physical lowering in order to be able to call Swift functions. Physical lowering is implemented using JIT-compiled adapter functions (thanks to the Arm64Writer API) that do the necessary SystemV-swiftcall translation. Semantic lowering is implemented by using the type’s metadata to figure out whether we should pass a value directly or not.

The compiler docs are a great resource to learn more about the calling convention.

Because Swift passes structs directly in registers, there isn’t a 1-to-1 mapping between registers and actual arguments.
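To illustrate why registers and arguments don’t line up one-to-one, here is a deliberately simplified Python model of the two lowering steps for integer-class arguments on AArch64. The thresholds are assumptions taken from the description above: a value is passed directly if it fits in at most four 8-byte registers and isn’t address-only (generic, containing a weak reference, and so on); otherwise a pointer to it is passed. Floating-point registers, the dedicated self/error registers, and the precise rules once a value no longer fits are not modelled, and all names here are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

WORD_SIZE = 8                                  # AArch64 GPR width in bytes
ARG_REGISTERS = [f"x{i}" for i in range(8)]    # x0-x7
MAX_DIRECT_WORDS = 4                           # assumed direct-passing limit


@dataclass
class Arg:
    name: str
    size: int                   # byte size, as found in the type's metadata
    address_only: bool = False  # e.g. generic, or contains a weak reference


def lower(args: list[Arg]) -> dict[str, list[str]]:
    """Return, per argument, the registers or stack slots that carry it."""
    plan: dict[str, list[str]] = {}
    next_register = 0
    next_stack_offset = 0
    for arg in args:
        words = -(-arg.size // WORD_SIZE)      # ceiling division
        # Semantic lowering: decide between direct and indirect passing.
        if arg.address_only or words > MAX_DIRECT_WORDS:
            words = 1                          # pass a pointer instead
        # Physical lowering: hand out whole registers, else spill to stack.
        if next_register + words <= len(ARG_REGISTERS):
            plan[arg.name] = ARG_REGISTERS[next_register:next_register + words]
            next_register += words
        else:
            plan[arg.name] = [f"[sp, #{next_stack_offset + WORD_SIZE * i}]"
                              for i in range(words)]
            next_stack_offset += WORD_SIZE * words
    return plan
```

In this model a 16-byte struct consumes two registers while a 40-byte one consumes only one (a pointer to it), which is exactly why argument index and register index drift apart.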

And now that we have JavaScript wrappers for types, and are able to call Swift functions from the JS runtime, a good next step would be extending Interceptor to support Swift functions.

For functions that are not stripped, we use a simple regex to parse argument types and names, same for return values. After parsing them we retrieve the type metadata, figure out the type’s layout, then simply construct JS wrappers for each argument, which we pass the Swift argument value, however many registers it occupies.
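The actual regex isn’t shown here, so the following is a hypothetical Python sketch of the idea, assuming demangled names of the form Module.Type.method(label: Type, ...) -> ReturnType. It splits parameters only on top-level commas, so generic types such as Swift.Dictionary<Swift.String, Swift.Int> stay intact; tuple return types and other unusual shapes are out of scope and simply yield None.

```python
from __future__ import annotations

import re

# Hypothetical pattern for demangled names such as
#   MyApp.Account.deposit(amount: Swift.Int, note: Swift.String) -> Swift.Bool
SIGNATURE = re.compile(
    r"^(?P<name>[^(]+)\((?P<params>.*)\)(?:\s*->\s*(?P<ret>[^()]+))?$")
PARAMETER = re.compile(r"^(?:(?P<label>\w+):\s*)?(?P<type>.+)$")


def split_top_level(params: str) -> list[str]:
    """Split on commas that aren't nested inside <...>, (...) or [...]."""
    parts: list[str] = []
    current = []
    depth = 0
    previous = ""
    for ch in params:
        if ch in "<([":
            depth += 1
        elif ch in ")]" or (ch == ">" and previous != "-"):  # "->" isn't a bracket
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
        previous = ch
    tail = "".join(current).strip()
    if tail:
        parts.append(tail)
    return parts


def parse_signature(demangled: str) -> dict | None:
    """Return name, (label, type) parameters and return type, or None."""
    match = SIGNATURE.match(demangled.strip())
    if match is None:
        return None
    params = []
    for part in split_top_level(match.group("params")):
        parameter = PARAMETER.match(part)
        if parameter is None:
            return None
        params.append((parameter.group("label"), parameter.group("type").strip()))
    returns = match.group("ret")
    return {
        "name": match.group("name").strip(),
        "params": params,
        "returns": returns.strip() if returns is not None else None,
    }
```

Each parsed type name would then be looked up in the type metadata to decide how many registers that argument occupies.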

Note that the bridge is still very early in development, and so:

Refer to the documentation for an up-to-date resource on the current API.

Enjoy!

swiftcall calling convention from the JavaScript runtime.

parameters.config. Thanks @mrmacete!

So much has changed. Let’s kick things off with the big new feature that guided most of the other changes in this release:

Earlier this year @insitusec and I were brainstorming ways we could simplify distributed instrumentation use-cases. Essentially ship a Frida Gadget that’s “hollow”, where application-specific instrumentation is provided by a backend.

One way to implement this would be to use the Socket.connect() JavaScript API, and then define an application-specific signaling protocol over which the code is loaded, before handing it off to the JavaScript runtime.

But this approach quickly ends up requiring quite a bit of boring glue code, and existing tools such as frida-trace wouldn’t actually be usable in such a setup.

That’s when @insitusec suggested that perhaps Frida’s Gadget could offer an inverse counterpart to its Listen interaction. So instead of it being a server that exposes a frida-server compatible interface, it could be configured to act as a client that connects to a Portal.

Such a Portal then aggregates all of the connected gadgets, and also exposes a frida-server compatible interface where all of them appear as processes. To the outside it appears as if they’re processes on the same machine the Portal is running on: they all have unique process IDs if you use enumerate_processes() or frida-ps, and one can attach() to them seamlessly.

This way, existing Frida tools work unchanged – and by enabling spawn-gating on the Portal, any connecting Gadget can be instructed to wait for somebody to resume() it after the desired instrumentation has been applied. This is the same way spawn-gating works in other situations.
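To illustrate the bookkeeping involved, here is a hypothetical in-memory model of a Portal presenting connected Gadgets as processes, with optional spawn-gating. It is not Frida's PortalService code: the `PortalRegistry` class, the sequential PID scheme and the method names are all assumptions made for the sketch.

```python
import itertools


class PortalRegistry:
    """Toy model: each connecting Gadget gets a unique, synthetic PID."""

    def __init__(self, spawn_gating=False, first_pid=1):
        self._pids = itertools.count(first_pid)
        self.spawn_gating = spawn_gating
        self.processes = {}   # pid -> application name
        self.pending = set()  # pids waiting for somebody to resume() them

    def gadget_connected(self, app_name):
        pid = next(self._pids)
        self.processes[pid] = app_name
        if self.spawn_gating:
            # The Gadget is held until a controller has instrumented it.
            self.pending.add(pid)
        return pid

    def gadget_disconnected(self, pid):
        self.processes.pop(pid, None)
        self.pending.discard(pid)

    def enumerate_processes(self):
        return sorted(self.processes.items())

    def resume(self, pid):
        if pid not in self.pending:
            raise KeyError(f"no pending process with pid {pid}")
        self.pending.remove(pid)
```

Two Gadgets embedded in the same app still show up as two distinct processes, which is what lets existing tools treat the Portal like any other frida-server.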

Implementing this was a lot of fun, and it wasn’t long until the first PoC was up and running. It took some time before all the details were clear, but this eventually crystallized into the following:

The Portal should expose two different interfaces:

```sh
$ frida-trace -H my.portal.com -n Twitter -i open
```

To a user this would be pretty simple: just grab the frida-portal binary from our releases and run it on some machine that the Gadget is able to reach. Then point tools at that machine, as if it were a regular frida-server.

That is, however, only one part of the story: how it would be used for simple use-cases. The frida-portal CLI program is actually nothing more than a thin CLI wrapper around the underlying PortalService. This CLI program is just a bit north of 200 lines of code, of which very little is actual logic.

One can also use our frida-core language bindings, e.g. for Python or Node.js, to instantiate the PortalService. This allows configuring it to not provide any control interface, and instead access its device property. This is a standard Frida Device object, on which one can enumerate_processes(), attach(), etc. Or one can do both at the same time.

Using the API also offers other features, but we will get back to those.

Given how useful it might be to run a frida-portal on the public Internet, it was also clear that we should support TLS. As we already had glib-networking among our dependencies for other features, this made it really cheap to add, footprint-wise.

And implementation-wise it’s a tiny bit of logic on the client side, and similarly straightforward on the server side.

For the CLI tools it’s only a matter of passing --certificate=/path/to/pem. If it’s a server, it expects a PEM-encoded file with a public + private key, and it will accept any certificate from incoming clients. For a client it also expects a PEM-encoded file, but only with the public key of a trusted CA, which the server’s certificate must match or be derived from.

At the API level it boils down to this:

```python
import frida

manager = frida.get_device_manager()
device = manager.add_remote_device("my.portal.com",
                                   certificate="/path/to/pem/or/inline/pem-data")
session = device.attach("Twitter")
…
```

The next fairly obvious feature that goes hand in hand with running a frida-portal on the public Internet is authentication. Our server CLI programs now support --token=secret, and so do our CLI tools.

At the API level it’s also pretty simple:

```python
import frida

manager = frida.get_device_manager()
device = manager.add_remote_device("my.portal.com",
                                   token="secret")
session = device.attach("Twitter")
…
```

But this gets a lot more interesting if you instantiate the PortalService through the API, as it makes it easy to plug in your own custom authentication backend:

```python
import frida

def authenticate(token):
    # Where `token` might be an OAuth access token
    # that is used to grab user details from e.g.
    # GitHub, Twitter, etc.
    user = …

    # Attach some application-specific state to the connection.
    return {
        'name': user.name,
    }

cluster_params = frida.EndpointParameters(authentication=('token', "wow-such-secret"))
control_params = frida.EndpointParameters(authentication=('callback', authenticate))
service = frida.PortalService(cluster_params, control_params)
```

The EndpointParameters constructor also supports other options such as address, port, certificate, etc.
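To show the difference between the two authentication shapes above, here is a small, self-contained model of how a server might turn `('token', secret)` and `('callback', fn)` into a single check. It is a hypothetical sketch, not how PortalService works internally: `make_authenticator` is our own name, and signalling rejection with `PermissionError` (or by the callback returning `None`) is a convention chosen for the sketch.

```python
import hmac


def make_authenticator(kind, value):
    """Build a token checker from a ('token', ...) or ('callback', ...) spec."""
    if kind == "token":
        expected = value.encode("utf-8")

        def check_static(token):
            # Constant-time comparison so the secret can't be guessed via timing.
            if not hmac.compare_digest(token.encode("utf-8"), expected):
                raise PermissionError("incorrect token")
            return {}  # no per-connection state for a shared secret

        return check_static

    if kind == "callback":
        def check_callback(token):
            session_info = value(token)  # e.g. look the user up via OAuth
            if session_info is None:
                raise PermissionError("rejected by callback")
            return session_info  # application-specific state for this connection

        return check_callback

    raise ValueError(f"unknown authentication kind: {kind!r}")
```

The static token suits the cluster side, where every Gadget shares one secret, while the callback lets the control side attach per-user state such as the `'name'` returned by `authenticate()`.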

That leads us to our next challenge, which is how to deal with transient connectivity issues. I did make sure to implement automatic reconnect logic in PortalClient, which is what Gadget uses to connect to the PortalService.

But even if the Gadget reconnects to the Portal, what should happen to loaded scripts in the meantime? And what if the controller gets disconnected from the Portal?

We now have a solution that handles both situations. But it’s opt-in, so the old behavior is still the default.

Here’s how it’s done:

```python
session = device.attach("Twitter",
                        persist_timeout=30)
```

Now, once some connectivity glitch occurs, scripts will stay loaded on the remote end, but any messages emitted will get queued. In the example above, the client has 30 seconds to reconnect before scripts get unloaded and data is lost.
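The semantics just described can be captured in a toy model: messages flow straight through while connected, get buffered while disconnected, and are flushed on resume unless the grace period has run out. This is a hypothetical sketch of the behavior, not Frida's implementation; `PersistentSessionModel` and its methods are our own names, and the clock is injectable so the timing can be tested.

```python
import time
from collections import deque


class PersistentSessionModel:
    """Toy model of persist_timeout semantics (not Frida code)."""

    def __init__(self, persist_timeout, clock=time.monotonic):
        self.persist_timeout = persist_timeout
        self.clock = clock            # injectable so the behavior can be tested
        self.connected = True
        self.disconnected_at = None
        self.unloaded = False
        self.pending = deque()        # messages emitted while disconnected
        self.delivered = []           # messages the controller has received

    def _check_expiry(self):
        # Once the grace period has elapsed, scripts are unloaded and
        # anything still queued is lost.
        if (not self.connected and not self.unloaded
                and self.clock() - self.disconnected_at > self.persist_timeout):
            self.unloaded = True
            self.pending.clear()

    def emit(self, message):
        """A script posts a message to the controller."""
        self._check_expiry()
        if self.unloaded:
            return
        if self.connected:
            self.delivered.append(message)
        else:
            self.pending.append(message)

    def connection_lost(self):
        self.connected = False
        self.disconnected_at = self.clock()

    def resume(self):
        """The controller reconnects; flush everything that was buffered."""
        self._check_expiry()
        if self.unloaded:
            raise RuntimeError("persist timeout elapsed; scripts were unloaded")
        self.connected = True
        while self.pending:
            self.delivered.append(self.pending.popleft())
```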

The controller would then subscribe to the Session.detached signal to be able to handle this situation:

```python
def on_detached(reason, crash):
    if reason == 'connection-terminated':
        # Oops. Better call session.resume()
        pass

session.on('detached', on_detached)
```

Once session.resume() succeeds, any buffered messages will be delivered and life is good again.
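Since the first resume attempt may well fail while the network is still flaky, a controller will likely want to retry within the persist timeout. Here is a hypothetical helper that does that with exponential backoff; `resume` stands in for `session.resume`, and the retry policy is our own choice rather than anything Frida prescribes. Given the threading constraints of the Python bindings, you would probably run it from a separate thread rather than directly inside the detached handler.

```python
import time


def resume_with_retry(resume, attempts=5, delay=1.0, sleep=time.sleep):
    """Call `resume` until it succeeds; return the number of attempts used."""
    last_error = None
    for attempt in range(attempts):
        try:
            resume()
            return attempt + 1
        except Exception as e:  # the real exception type depends on the bindings
            last_error = e
            if attempt + 1 < attempts:
                # Back off 1s, 2s, 4s, ... between attempts.
                sleep(delay * (2 ** attempt))
    raise last_error
```

Keep the total backoff comfortably below `persist_timeout`, or the scripts will be gone by the time a retry finally succeeds.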

The above example does gloss over a few details such as our current Python bindings’ finicky threading constraints, but have a look at the full example here. (This will become a lot simpler once we port our Python bindings off our synchronous APIs and onto async/await.)

Alright, so next up we’ve got a Portal running in a data center in the US, but the Gadget is at my friend’s place in Spain, and I’m trying to control it from Norway using frida-trace. It would be a shame if the script messages coming from Spain had to cross the Atlantic twice, not just because of the latency, but also because of the AWS bill I’ll have to pay next month. I’m dumping memory right now, and that’s quite a bit of traffic right there.

This one’s a bit harder, but there’s libnice, a lightweight and mature ICE implementation built on GLib, so we can go ahead and use that. Given that GLib is already part of our stack – as it’s our standard library for C programming (and our Vala code compiles to C code that depends on GLib) – it’s a perfect fit. And this is very good news footprint-wise.
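Why does ICE keep traffic on the direct route when one exists? Connectivity checks are ordered by candidate-pair priority, and the ICE specification (RFC 8445, and RFC 5245 before it, with the same formulas) ranks host and server-reflexive candidates far above relayed ones. The sketch below computes those priorities using the RFC's recommended type preferences. It illustrates the math only and is not libnice's code.

```python
# Recommended type preferences from the ICE RFCs.
TYPE_PREFERENCE = {"host": 126, "prflx": 110, "srflx": 100, "relay": 0}


def candidate_priority(cand_type, local_preference=65535, component_id=1):
    """priority = 2^24 * type pref + 2^8 * local pref + (256 - component ID)"""
    return ((TYPE_PREFERENCE[cand_type] << 24)
            + (local_preference << 8)
            + (256 - component_id))


def pair_priority(controlling, controlled):
    """2^32 * MIN(G, D) + 2 * MAX(G, D) + (G > D ? 1 : 0)"""
    g, d = controlling, controlled
    return (1 << 32) * min(g, d) + 2 * max(g, d) + (1 if g > d else 0)


print(candidate_priority("host"))  # → 2130706431
```

Because the pair priority is dominated by the weaker of its two candidates, any pair involving a relay sorts below a direct pair, so relays only carry traffic when direct paths fail.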

As a user it’s only a matter of passing --p2p along with a STUN server:

```sh
$ frida-trace \
    -H my.portal.com \
    --p2p \
    --stun-server=my.stunserver.com \
    -n Twitter \
    -i open
```

(TURN relays are also supported.)

The API side of the story looks like this:

```python
session.setup_peer_connection(stun_server="my.stunserver.com")
```

That’s all there is to it!
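For the curious: what the STUN server contributes is telling each peer which public address and port its packets appear to come from, so the peers can try to reach each other directly. The request that starts this is tiny. The sketch below builds a STUN Binding Request header as laid out in RFC 5389 using only the standard library; sending it over UDP (the default STUN port is 3478) and decoding the XOR-MAPPED-ADDRESS attribute in the reply are left out, and `build_binding_request` is a hypothetical helper name.

```python
import os
import struct

STUN_BINDING_REQUEST = 0x0001
STUN_MAGIC_COOKIE = 0x2112A442


def build_binding_request(transaction_id=None):
    """Return the 20-byte header of a STUN Binding Request with no attributes."""
    if transaction_id is None:
        transaction_id = os.urandom(12)
    if len(transaction_id) != 12:
        raise ValueError("transaction ID must be 12 bytes")
    # Message type, message length (0: no attributes), magic cookie, transaction ID.
    return struct.pack("!HHI", STUN_BINDING_REQUEST, 0, STUN_MAGIC_COOKIE) + transaction_id
```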

You may have noticed that our Gadget has been a recurring theme so far. I’m not very excited about adding features that only apply to one mode, such as only Injected mode but not Embedded mode. So it was clear to me quite early on that Portals had to be a universally available feature.

So say my buddy is reversing a target on his iPhone from his living room in Italy, and I’d like to join in on the fun. He can go ahead and run:

```sh
$ frida-join -U ReversingTarget my.portal.com cert.pem secret
```

Now I can jump in with the Frida REPL:

```sh
$ frida \
    -H my.portal.com \
    --certificate=cert.pem \
    --token=secret \
    --p2p \
    --stun-server=my.stunserver.com \
    -n ReversingTarget
```

And if my buddy would like to use the API to join the Portal, he can:

```python
session = frida.get_usb_device().attach("ReversingTarget")
membership = session.join_portal("my.portal.com",
                                 certificate="/path/to/cert.pem",
                                 token="secret")
```

Something I’ve been wanting to build since before Frida was born is an online collaborative reversing app. Back in the very beginning of Frida, I built a desktop GUI that had integrated chat, console, etc. My not-so-ample spare time was a challenge, however, so I eventually got rid of the GUI code and decided to focus on the API instead.

Now we’re in 2021, and single-page apps (SPAs) can be a really appealing option in many cases. I’ve also noticed that there have been quite a few SPAs built on top of Frida, and that’s super-exciting! But what I’ve noticed when toying with SPAs on my own is that it’s quite tedious to have to write the middleware.

Well, with Frida 15 I had to make some protocol changes to accommodate the features that I’ve covered so far, so it also seemed like the right time to really break the protocol and go ahead with a major version bump. This is something I’ve been trying to avoid for a long time, as I know how painful they are to everyone, myself included.

So now browsers can finally join in on the fun, without any middleware needed:

```typescript
async function start() {
    const ws = wrapEventStream(new WebSocket(`ws://${location.host}/ws`));
    const bus = dbus.peerBus(ws, {
        authMethods: [],
    });

    const hostSessionObj = await bus.getProxyObject('re.frida.HostSession15',
        '/re/frida/HostSession');
    const hostSession = hostSessionObj.getInterface('re.frida.HostSession15');

    const processes: HostProcessInfo[] = await hostSession.enumerateProcesses({});
    console.log('Got processes:', processes);

    const target = processes.find(([, name]) => name === 'hello2');
    if (target === undefined) {
        throw new Error('Target process not found');
    }
    const [pid] = target;
    console.log('Got PID:', pid);

    const sessionId = await hostSession.attach(pid, {
        'persist-timeout': new Variant('u', 30)
    });

    …
}
```

(Full example can be found in examples/web_client.)

This means that Frida’s network protocol is now WebSocket-based, so browsers can finally talk directly to a running Portal/frida-server, without any middleware or gateways in between.
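Being plain WebSocket (RFC 6455) is what makes this work from a browser: the page opens an ordinary HTTP connection with an Upgrade request, and the server proves it understood the handshake by hashing the client’s `Sec-WebSocket-Key` together with a fixed GUID. The sketch reproduces just that computation with the standard library. It is illustrative and not code from frida-server; `websocket_accept` is our own name.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def websocket_accept(sec_websocket_key):
    """Compute the Sec-WebSocket-Accept value the server must send back."""
    digest = hashlib.sha1((sec_websocket_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


print(websocket_accept("dGhlIHNhbXBsZSBub25jZQ=="))  # → s3pPLMBiTxaQ9kYGzzhZRbK+xOo=
```

The sample key and expected answer are the ones used in RFC 6455 itself. Everything after this handshake is framed WebSocket traffic, which is why no middleware needs to sit in between.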

I didn’t want this to be a half-baked story though, so I made sure that the peer-to-peer implementation is built on WebRTC data channels – this way even browsers can communicate with minimal latency and help keep the AWS bill low.

Once we’ve built a web app to go with our Portal, which speaks WebSocket natively, and thus also HTTP, we can also make it super-easy to serve that SPA from the same server:

```sh
$ ./frida-portal --asset-root=/path/to/web/app
```

This is also easy at the API level:

```python
control_params = frida.EndpointParameters(asset_root="/path/to/web/app")
service = frida.PortalService(cluster_params, control_params)
```

A natural next step once we have a controller, say a web app, is that we might want collaboration features where multiple running instances of that SPA are able to communicate with each other.

Given that we already have a TCP connection between the controller and the PortalService, it’s practically free to also let the developer use that channel. For many use-cases, needing an additional signaling channel brings a lot of complexity that could be avoided.

This is where the new Bus API comes into play:

```python
import frida

def on_message(message, data):
    # TODO: Handle incoming message.
    pass

manager = frida.get_device_manager()
device = manager.add_remote_device("my.portal.com")

bus = device.bus
bus.on('message', on_message)
bus.attach()

bus.post({
    'type': 'rename',
    'address': "0x1234",
    'name': "EncryptPacket"
})
bus.post({
    'type': 'chat',
    'text': "Hey, check out EncryptPacket everybody"
})
```

Here we’re first attaching a message handler so we can receive messages from the Portal.

Then we’re calling attach() so that the Portal knows we’re interested in communicating with it. (We wouldn’t want it sending messages to controllers that don’t make use of the Bus, such as frida-trace.)
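As for the `TODO` in `on_message`, one way to fill it in is a small dispatcher keyed on the application-defined `'type'` field. This sketch assumes that the Portal relays the `'rename'` and `'chat'` messages from the example to other controllers unchanged, which depends entirely on how you program your PortalService. `make_bus_handler` and the state layout are hypothetical.

```python
def make_bus_handler():
    """Return (on_message, state) for the 'rename' and 'chat' messages above."""
    state = {"names": {}, "chat": []}

    def on_rename(message):
        # Remember the symbol name somebody assigned to an address.
        state["names"][message["address"]] = message["name"]

    def on_chat(message):
        state["chat"].append(message["text"])

    handlers = {"rename": on_rename, "chat": on_chat}

    def on_message(message, data):
        handler = handlers.get(message.get("type"))
        if handler is not None:
            handler(message)
        # Unknown message types are ignored in this sketch.

    return on_message, state
```

Keeping the handlers in a dict makes it easy to add new collaborative message types without touching the dispatch logic.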